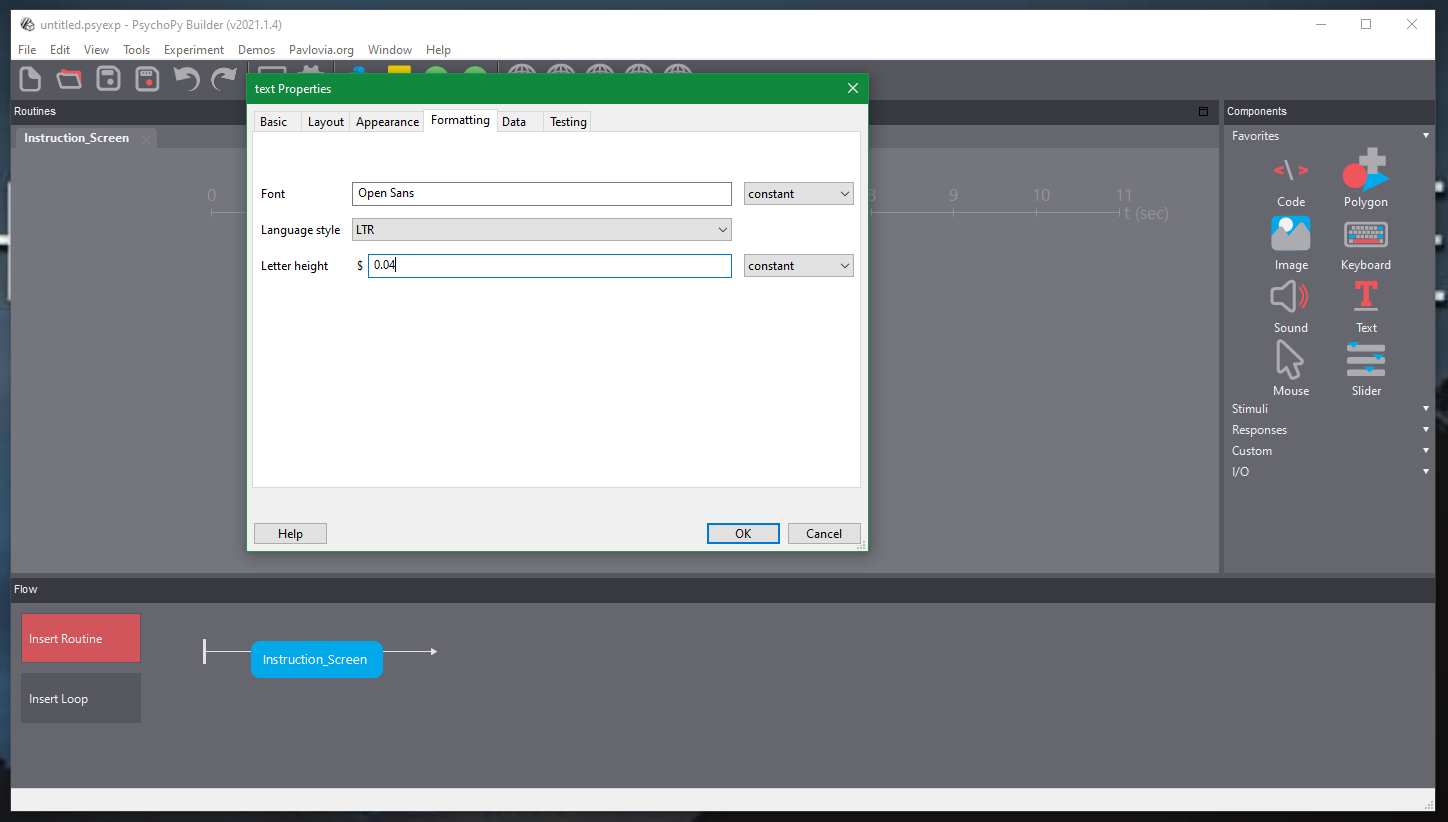

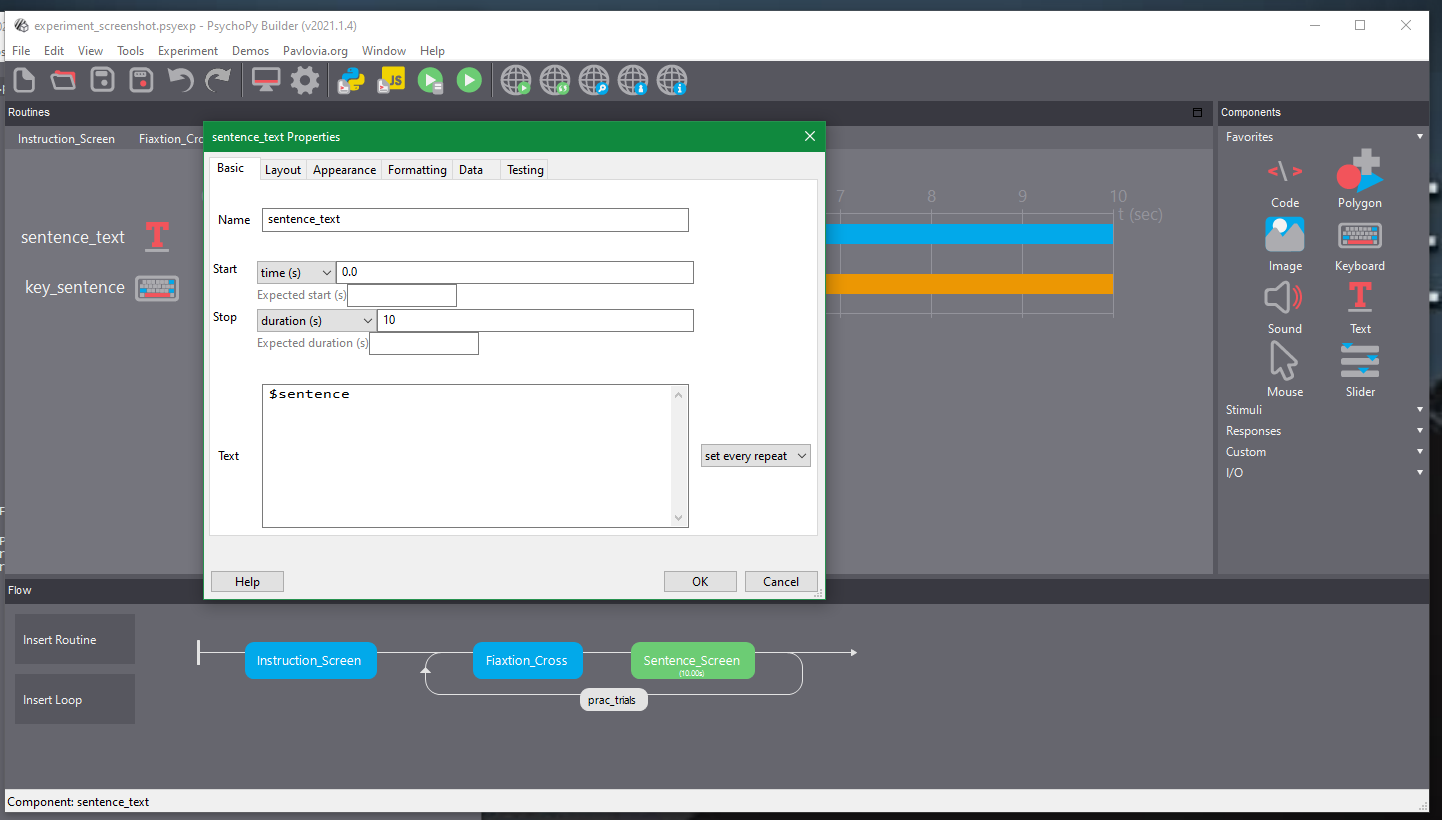

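```python
# Which counterbalancing list (1-4) was entered in the expInfo dialog.
# expInfo values are strings, so we compare against "1", "2", etc.
list_select = expInfo['list_select']

# Selected rows uses Python slice notation: the start row is included and the
# stop row is excluded, so consecutive ranges share a boundary and no row is skipped.
if list_select == "1":
    row_select = "0:100"
elif list_select == "2":
    row_select = "100:200"
elif list_select == "3":
    row_select = "200:300"
elif list_select == "4":
    row_select = "300:400"
```

Overview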

Using this guide we will build a psycholinguistic experiment in PsychoPy and learn how to get that experiment online using Pavlovia. We will integrate the PsychoPy experiment into Qualtrics, which will make the participant information, consent, and debrief forms more user-friendly and responsive than if we had built them in PsychoPy. We will then learn how to download our data from Pavlovia and Qualtrics for analysis.

Please note this guide is adapted from a workshop guide at the University of Sunderland for the module PSY260: Research Methods & Analysis in Psychology.

Experiment Background

We will build a study based on Glenberg, A. M. & Kaschak, M. P. (2002). Grounding language in action. Psychonomic Bulletin & Review, 9(3), 558-565.

This study is an influential one in the area of embodied cognition. Some approaches to embodied cognition assume that language comprehension is not an amodal and abstract task, but instead we add meaning to language by simulating the events being described. For example, when we read the sentence “hang the coat on the hanger” we understand this sentence and judge it as sensible by simulating the actions being described. However, when reading “hang the coat on the teacup” comprehension is incomplete or the sentence is judged as being nonsensical as we cannot properly simulate an impossible action. More strikingly, when we read action verbs such as “lick” and “kick” we see activity in the sensorimotor regions associated with these actions (i.e. activity in the areas controlling the tongue for “lick” and the foot for “kick”). It is thought that this activation adds meaning to language which improves comprehension. In short, language processing may be embodied in the sense that it relies on our prior and simulated physical experiences (Hauk, Johnsrude, & Pulvermüller, 2004).

This particular study by Glenberg and Kaschak shows evidence for the action-sentence compatibility effect (ACE). Briefly, it is thought that language developed to coordinate action. The ACE proposes that language processing primes action, such that any sentence that implies an action should facilitate similar physical movements in people reading about the action being performed.

In this study, Glenberg and Kaschak asked participants to judge whether sentences make sense by pressing a button. In one case, participants indicated a sentence made sense by pressing a button away from their body. Alternatively, they indicated a sentence was nonsense by pressing a button close to their body. (Or vice versa; an example of counterbalancing.) Glenberg and Kaschak found that when reading a sentence such as “close the drawer” – which implies movement away from the body – participants were faster to rate it as sensible than a sentence such as “open the drawer” if the sensibility judgment required moving their hand away from their body. This is because in the first case, the direction of movement implied by the sentence (i.e. away from the body) and the movement by participants to indicate it made sense (i.e. away from the body) matched. In the second case, the direction of movement implied by the sentence and the movement made by participants did not match. It’s thought that when the action and the sentence movement match, this speeds up processing of the language because the sentence primes participants to perform those actions.

However, recent articles call this landmark finding into question, with a host of replications showing no evidence for this action-sentence compatibility effect (Papesh, 2015). More recently, a pre-registered multi-site replication of the study by Morey et al. (2021) failed to find evidence for the ACE across 18 different labs. These findings undermine confidence in this behavioural method for detecting ACE effects. Caution must be applied when interpreting this as a critical blow to embodied cognition more widely, not only due to the wider evidence in support of embodied cognition using brain imaging methods, but because the items in the ACE experiments by Glenberg and Kaschak, Papesh, and Morey et al. show a great deal of variability between items. For example, Bergen and Wheeler (2010) found that grammatical aspect strongly influences findings. When progressive sentences (e.g. John is closing the drawer/John is opening the drawer) are used, strong ACE effects are found. But when perfect sentences (e.g. John has closed the drawer/John has opened the drawer) are used, no ACE effect is found. This may be because with progressive sentences the action is ongoing, which forces readers to focus on and simulate the action, while with perfect sentences the action has been completed, which forces readers to focus on and simulate the end state of the event, and not the actions.

Your Task

Main Instructions

You are required to produce an APA formatted research report on embodied cognition. Specifically, you will perform a conceptual replication of the Glenberg and Kaschak (2002) experiment above. In this case, the current study will use items adapted from Papesh, slightly different response keys, and an online method (hence it is not a direct replication). You have two options for conducting the experiment:

Use the items we have given you, adapted from the Papesh study, and conduct a conceptual replication of the Glenberg & Kaschak study with no changes.

Change the items to use only progressive sentences so that the items are consistent and more likely to produce an ACE effect. You can do this by exploring the items in the Appendix of Bergen and Wheeler (2010).

Please limit your changes to the experiment to adapting the items only. Do not add any other variables.

The original study explored the main effects of response direction (sensible is near vs. sensible is far) and sentence direction (movement towards vs. movement away), and their interaction. The interaction allowed Glenberg and Kaschak to determine whether responses that match the movement implied by the sentence speed up language processing. This interaction is the main test of the hypothesis.
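As a rough illustration of this 2 × 2 logic, the sketch below computes both main effects and the compatibility (ACE) contrast from four condition mean reaction times. The function name and the dictionary layout are assumptions made for this example. They are not part of the original study's analysis, and a report would test these effects statistically rather than rely on raw differences.

```python
def ace_effects(means):
    """Summarise a 2 x 2 design from condition mean RTs (ms).

    `means` maps (response_direction, sentence_direction) to a mean RT, where
    response_direction is "near" or "far" (where the 'sensible' key sits) and
    sentence_direction is "towards" or "away" (the movement the sentence implies).
    """
    near_towards = means[("near", "towards")]
    near_away = means[("near", "away")]
    far_towards = means[("far", "towards")]
    far_away = means[("far", "away")]

    # Main effect of response direction: far minus near, averaged over sentences.
    response_effect = (far_towards + far_away) / 2 - (near_towards + near_away) / 2
    # Main effect of sentence direction: away minus towards, averaged over responses.
    sentence_effect = (near_away + far_away) / 2 - (near_towards + far_towards) / 2
    # Compatible cells: the response moves the same way the sentence implies.
    compatible = (near_towards + far_away) / 2
    incompatible = (near_away + far_towards) / 2
    # Positive values mean faster responses on compatible trials (an ACE).
    ace = incompatible - compatible
    return {"response": response_effect, "sentence": sentence_effect, "ace": ace}
```

The ACE value here is the interaction expressed as a difference between compatible and incompatible cell means. The full interaction contrast is twice this value, with the sign reversed.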

The Experiment

The experiment will display a fixation screen prior to individual trials which will then present participants with a sentence on screen which is either (a) sensible, or (b) nonsense, and which in case (a) implies either a movement (i) towards the participant or (ii) away from the participant. Participants will indicate whether sentences make sense by pressing a button towards or away from themselves. We will design this study so that it has two phases whereby participants indicate a sensible sentence using each direction of movement respectively. We will also counterbalance the items using various lists such that confounding variables are controlled.

We will provide you with the items for use with this guide.

Minor Details

The version of the study we produce will show participants:

A screen displaying a fixation cross (+) to attract participants’ attention to the centre of the screen before seeing a sentence. To progress to the next screen, participants must press the Space bar on the keyboard.

A screen showing a short sentence. To progress to the next screen, participants must make a choice as to whether the sentence is sensible or not using the w or s key on the keyboard.

Before these screens, participants will see instructions on how to respond to sensible or nonsense sentences using the w and s keys on the keyboard and how to progress from a fixation cross by pressing the Space bar.

Participants will take part in a short practice phase of four fixed items, two of which are sensible and two of which are nonsense sentences. There are four lists of experimental items made up of sensible and nonsense sentences. These four lists counterbalance the direction of transfer of the sentence (i.e. towards or away from the participant) and whether or not a correct response requires a w or s key-press. An example of the four versions of item 1 is presented below:

You sold the land to Hakeema. (sensible response is w)

Hakeema sold the land to you. (sensible response is w)

You sold the land to Hakeema. (sensible response is s)

Hakeema sold the land to you. (sensible response is s)

Participants will thus see one version of each item. The items will be split into two blocks and presented to participants separately. In one block, a sensible sentence will require a w key-press, and in the other block a sensible sentence will require an s key-press. Across items, participants will see both sensible and nonsense sentences. Thus, participants will see all conditions (i.e. this is a within-subjects design), but they will not see all conditions for all items (i.e. this is a between-items design).

Using this Guide

To build the experiment we have broken down the process into a series of step-by-step instructions. These instructions are presented as full paragraphs with key details in bold text. Important steps are further illustrated with annotated images. Try to follow the guide to build the experiment.

Building the Experiment

Getting Started with PsychoPy

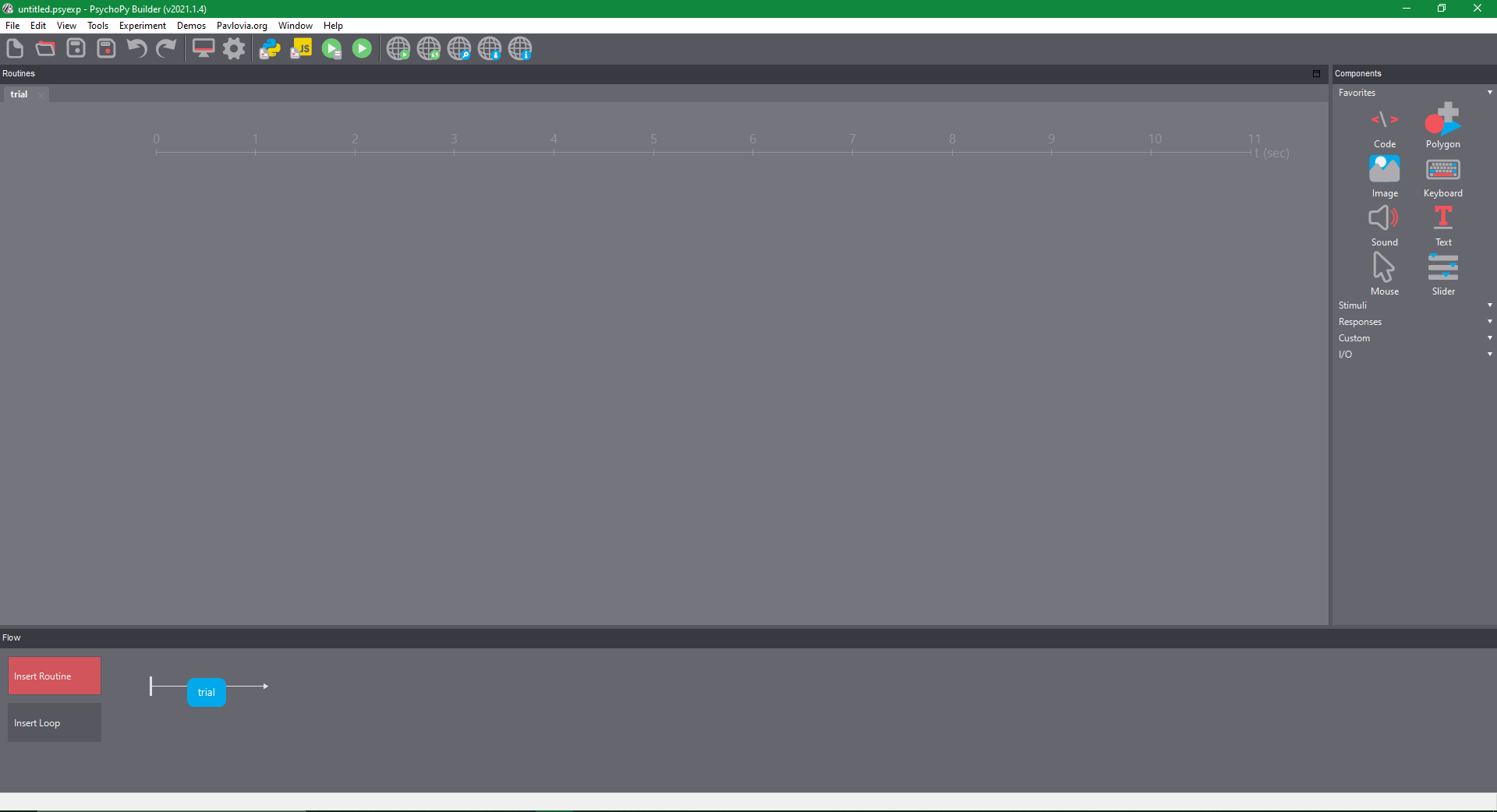

1 - The default project.

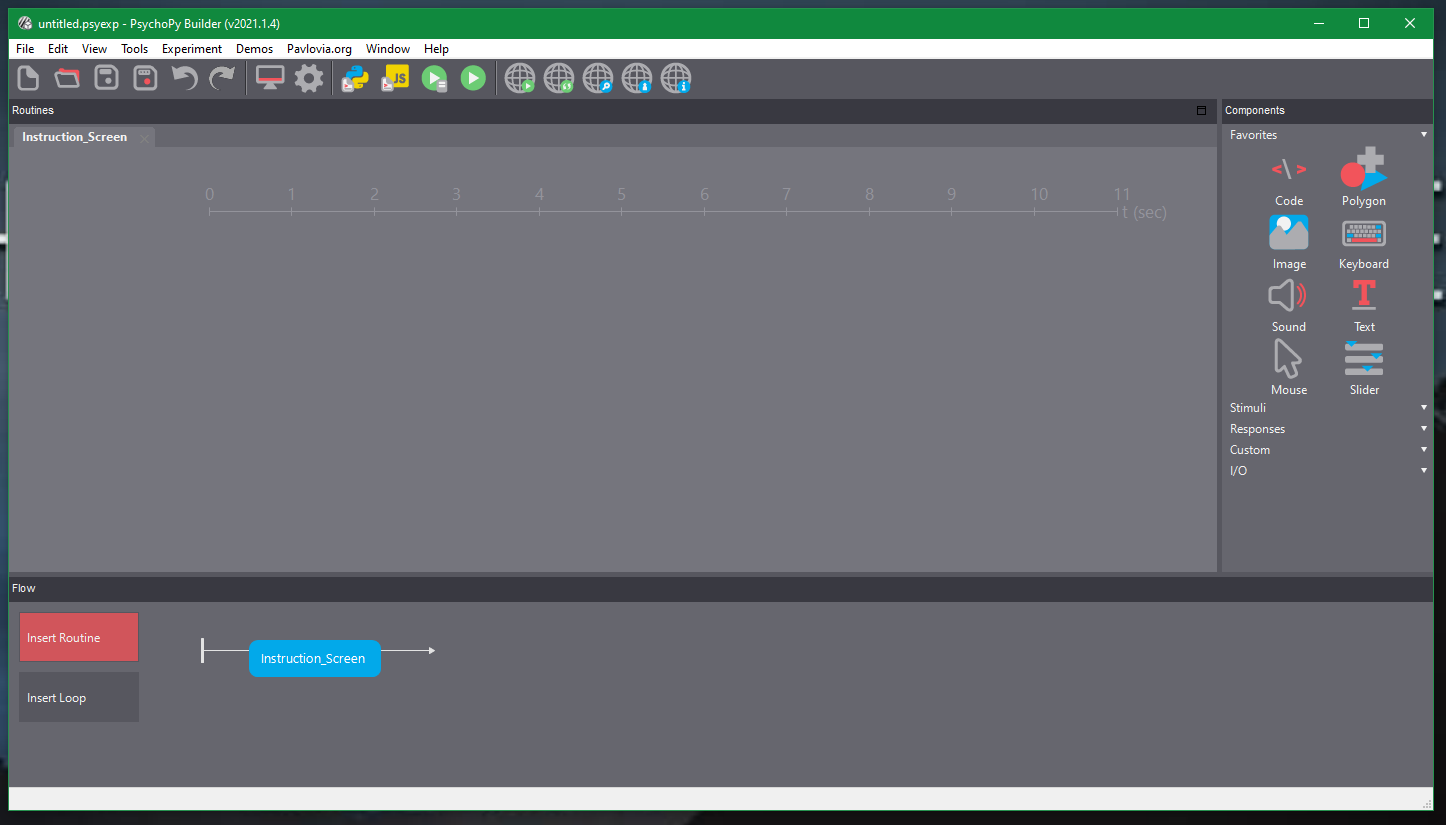

The programme opens onto the Builder view, with only one trial screen present which contains no components. There are four panels in the Builder view that you will be using:

Actions: This is at the very top of the PsychoPy builder and allows you to run the experiment both online and offline, save the experiment and generate coding scripts, amongst other options.

Routines: This is the main window of the PsychoPy builder where the components for each screen will be stored, showing the type of component along with its duration and order.

Components: This is to the right of the Routines panel and is where the various components (such as text, image, and sound) are stored and can be added to various trial screens.

Flow: This is below the Routines panel and is where the trial screens are shown in order of presentation. This allows you to add loops to the study. Loops determine how screens should change for a given trial. For example, if we have 60 trials in a study which simply display a different word on screen to the participant on each trial, we could insert 60 pages. But to save time (and to allow randomisation of trials) we can instead define the words that should be inserted on a page and tell the study to loop through these, displaying them sequentially in the same way for every trial.
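To make the idea of a loop concrete, here is a plain-Python sketch (no PsychoPy required) of what the Flow does in the 60-word example. One routine is defined once and reused, and only the word changes on each pass. The word list and function name are hypothetical stand-ins for a conditions file.

```python
import random

def run_loop(words, shuffle=True, seed=None):
    """Show one 'page' per word, reusing the same display step every time."""
    order = list(words)
    if shuffle:
        # Randomising the order is one reason to prefer a loop over fixed pages.
        random.Random(seed).shuffle(order)
    shown = []
    for word in order:
        # The routine is identical on every pass; only the inserted word differs.
        shown.append(f"Displaying: {word}")
    return shown

for line in run_loop(["apple", "river", "candle", "mountain"], seed=1):
    print(line)
```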

Constructing the Basic Study

We will build this experiment to work online. This is easiest if you save the experiment to its own folder on your desktop. Create a folder on your Desktop called PSY260_Experiment. Save your experiment to this folder using the Save icon in the Actions panel.

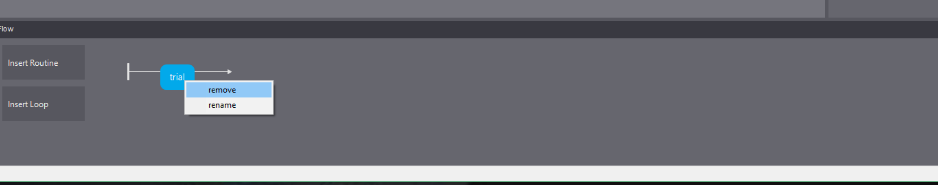

Before we get started on building the experiment, we will delete the default routine on the timeline (called trial). To delete it, right-click on ‘trial’ and select remove. This will allow us to start from a completely blank experiment. We will now add some instructions to our experiment.

2 - Removing the trial routine.

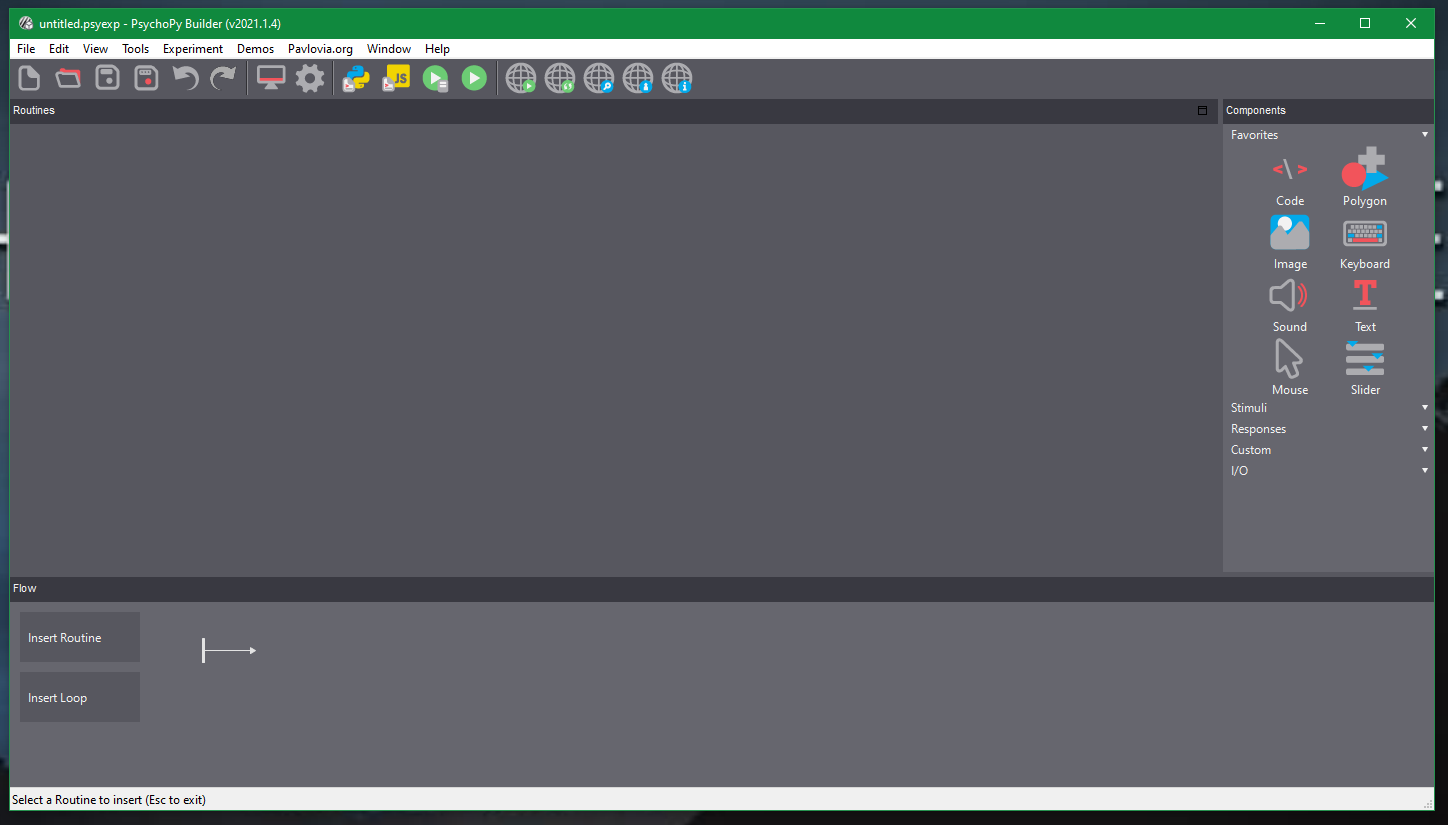

In the Flow panel click on Insert Routine and then (new).

3 - A blank project.

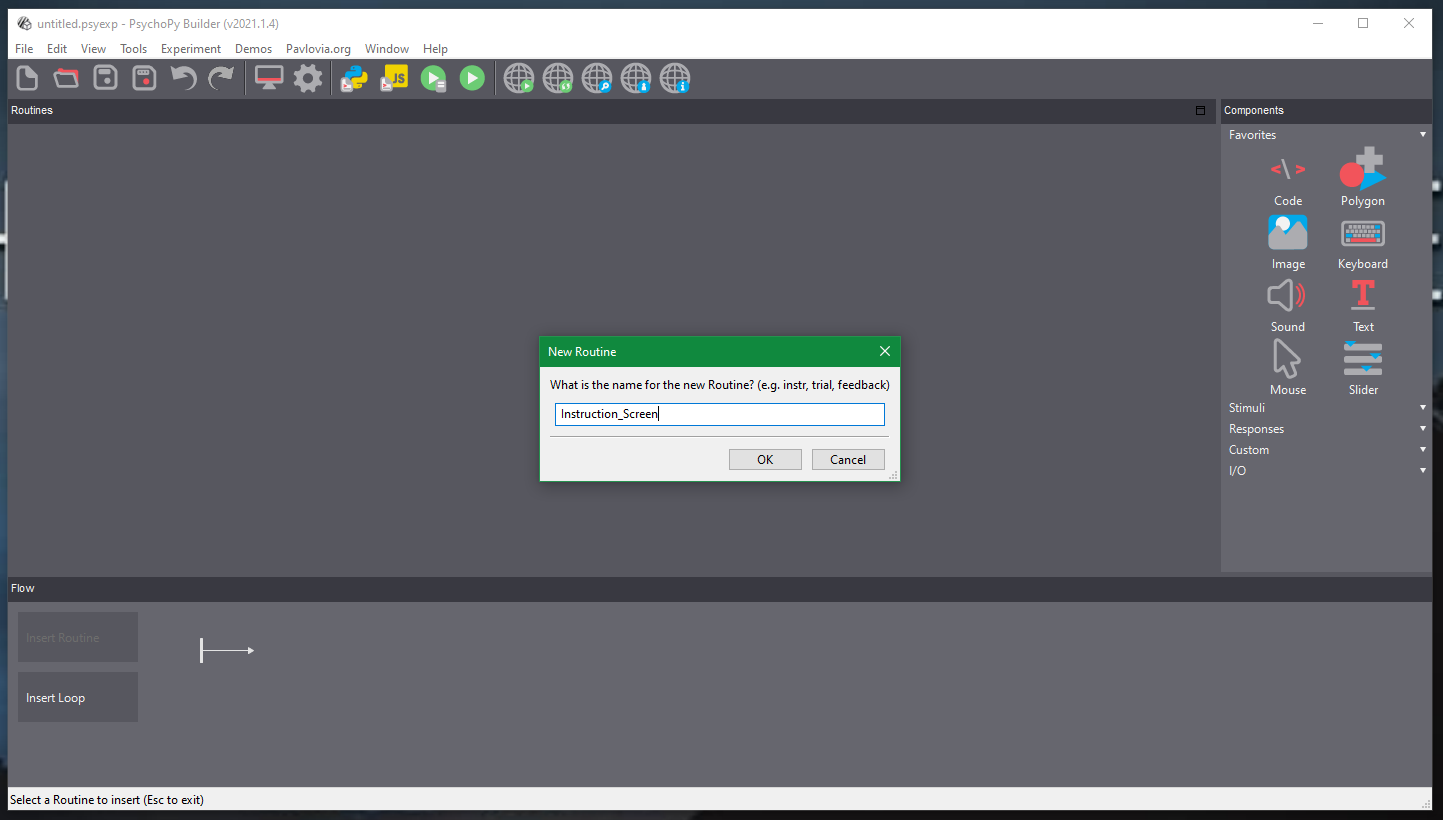

Name the new routine Instruction_Screen.

4 - Renaming the Routine.

Click the dot that shows up on the timeline to add the Instruction_Screen to the beginning of the timeline. This will add a tab along the top of the Routines panel called Instruction_Screen. Click the tab to make sure we will add components to the correct screen.

5 - The Instruction_Screen routine added to the timeline.

Next, we will add some text to our new Instruction_Screen routine. For now, this will just be some placeholder text that we will replace once we’ve added the core components to the study.

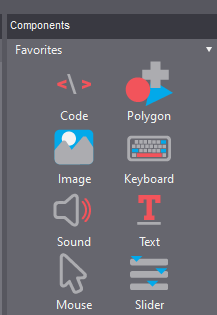

First, click on the Text Component from the Stimuli tab in the Components panel. This will bring up some options in a window.

6 - Components options.

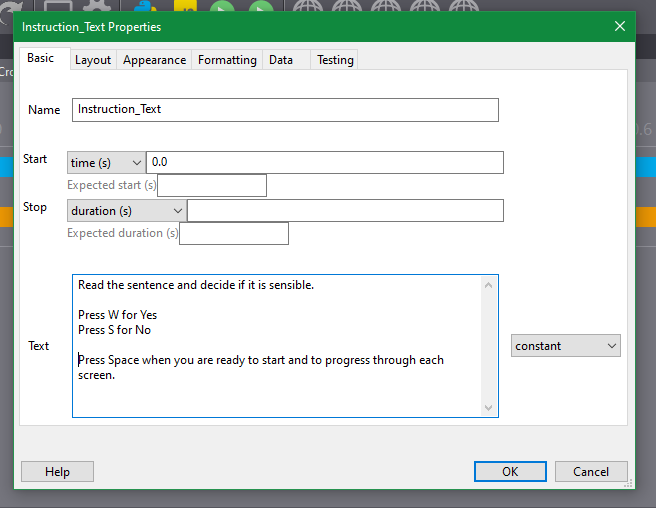

In the text Properties window under the Basic tab change the following:

Name from text to Instruction_Text. This will allow us to identify our instructions amongst other components later on.

duration (s): delete the default value, leaving this section blank. This will ensure the instructions are always on screen, allowing participants as long as they like to read them. Later we will add an option for them to move on from this screen through a button press.

Text: Change this to “Our instructions”. This is just placeholder text for now. We will add full instructions later.

7 - Changing the basic text properties.

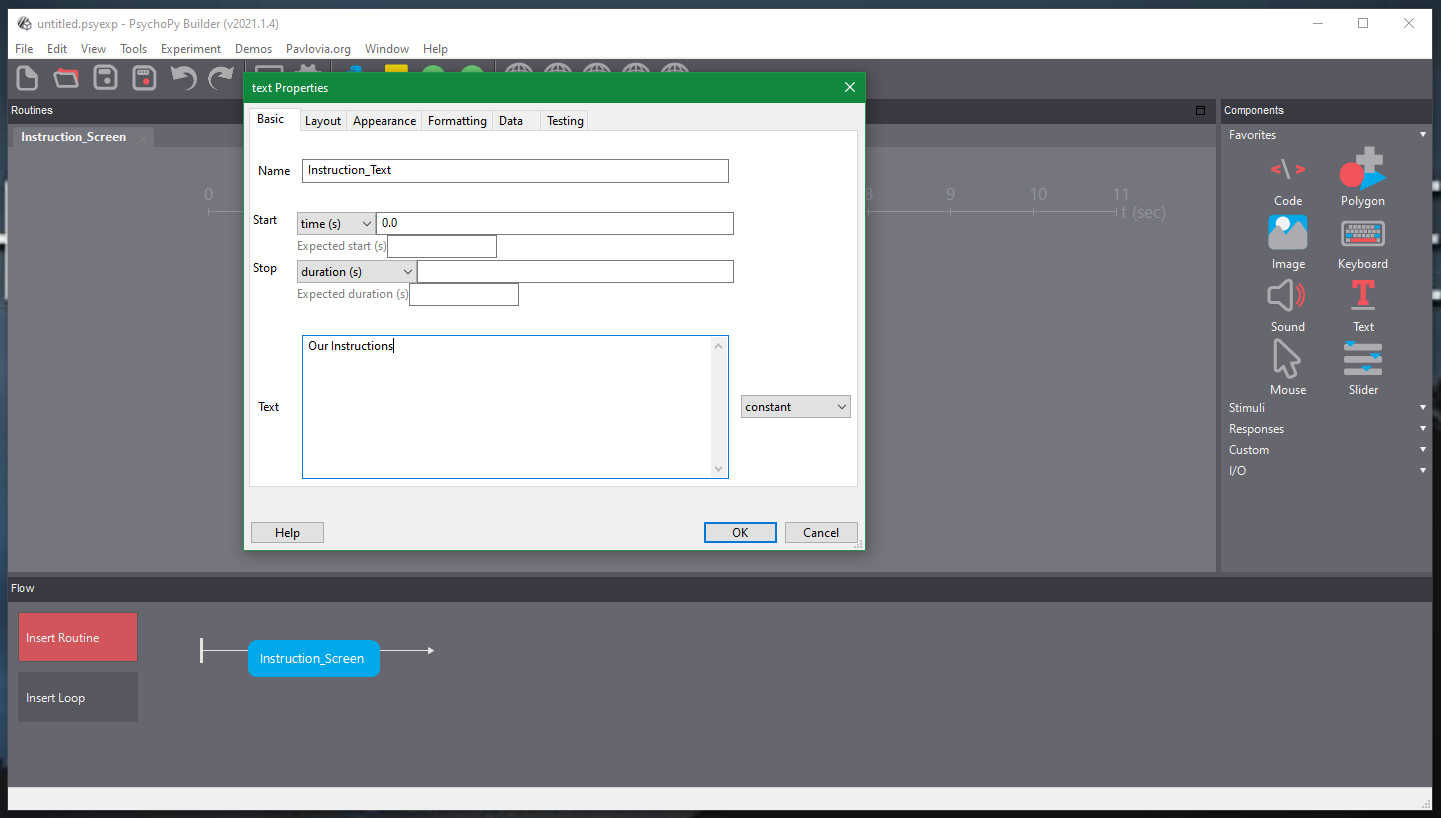

8 - Click on the Formatting tab. We will make the text a little bigger by changing the letter height to 0.04. This is a reasonable size for most screens, but if it doesn’t look right on yours, make it larger or smaller as you prefer.

Currently, there is no way to move on to another screen. The instruction screen will just show forever until you quit the experiment. We will add a Keyboard component that will allow participants to press a button to move past this screen.

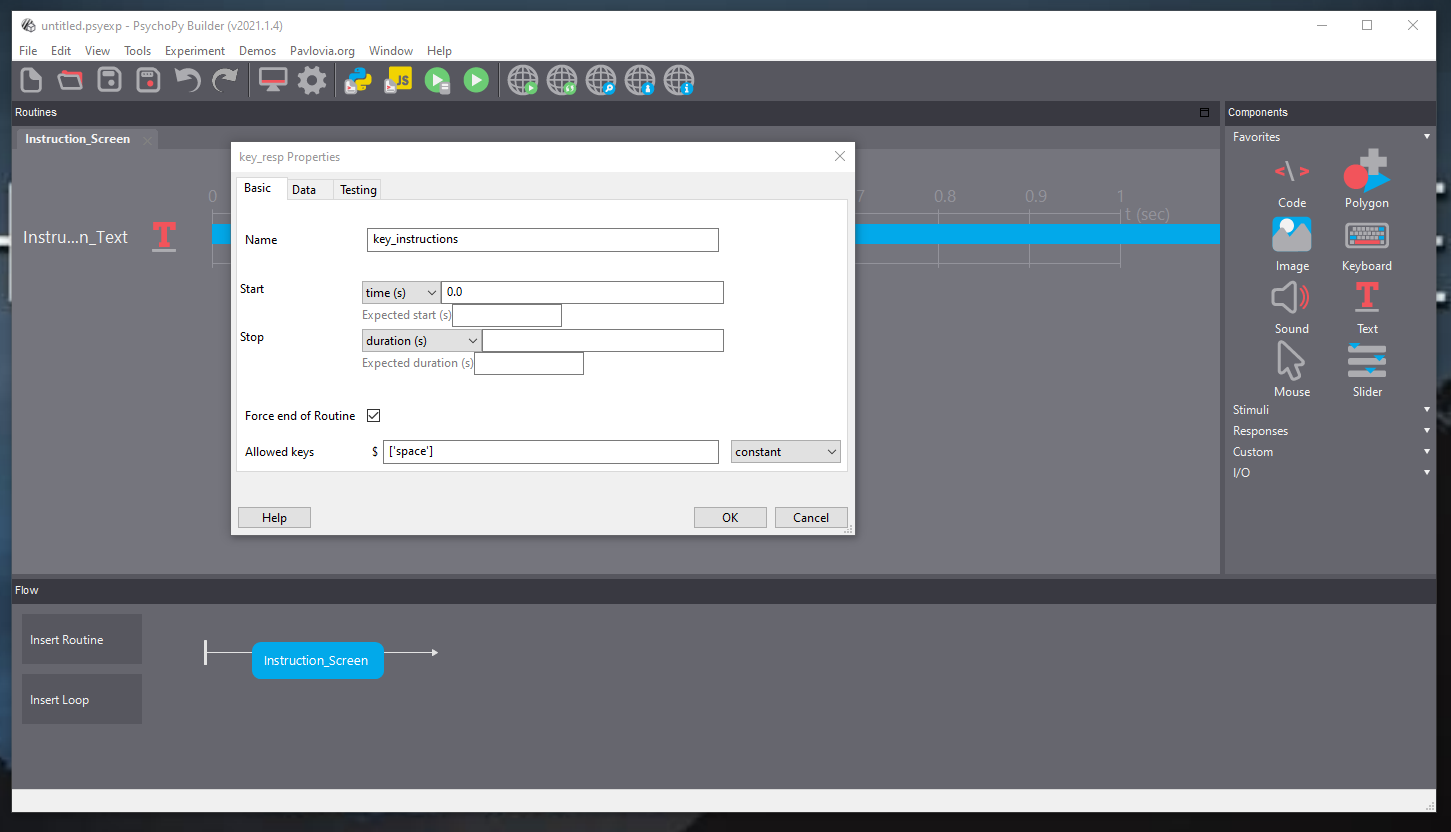

First, select Keyboard from the Component menu.

This lets participants press Space to move on once they are done. Within the Keyboard component we will change its name (key_instructions), the allowed keys (['space']), and what it stores (nothing).

9 - Component options.

On the Basic tab, change the name to key_instructions and allowed keys to ['space'] (use straight quotes). Naming the component lets us identify this key response amongst others once we collect data in the study. Changing the allowed keys to space ensures participants can move on from the instructions screen only by pressing the space bar.

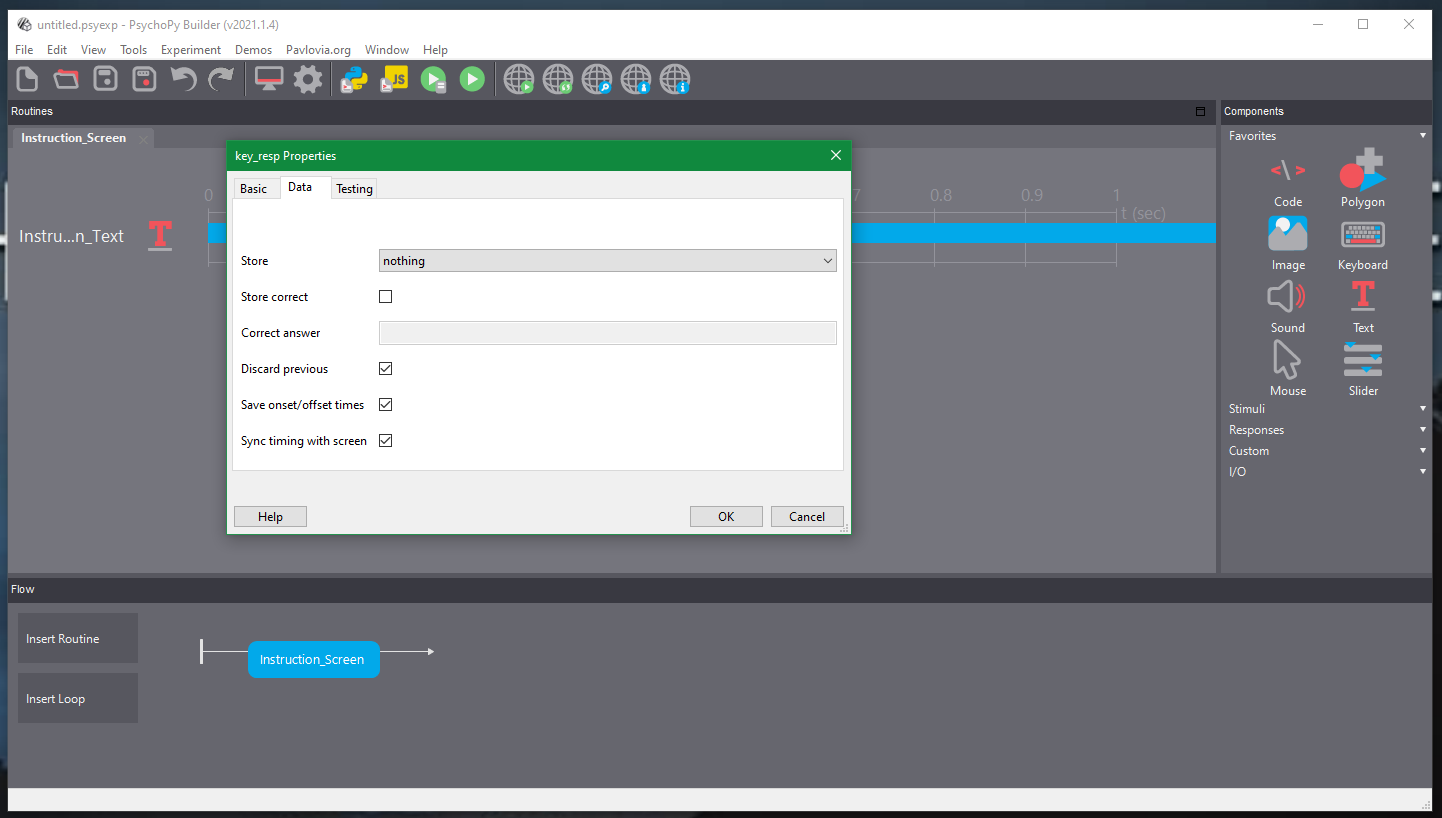

On the Data tab, change the Store option to nothing. This will ensure we don’t collect any data from participants pressing the keyboard on this screen as it isn’t useful for our analysis.

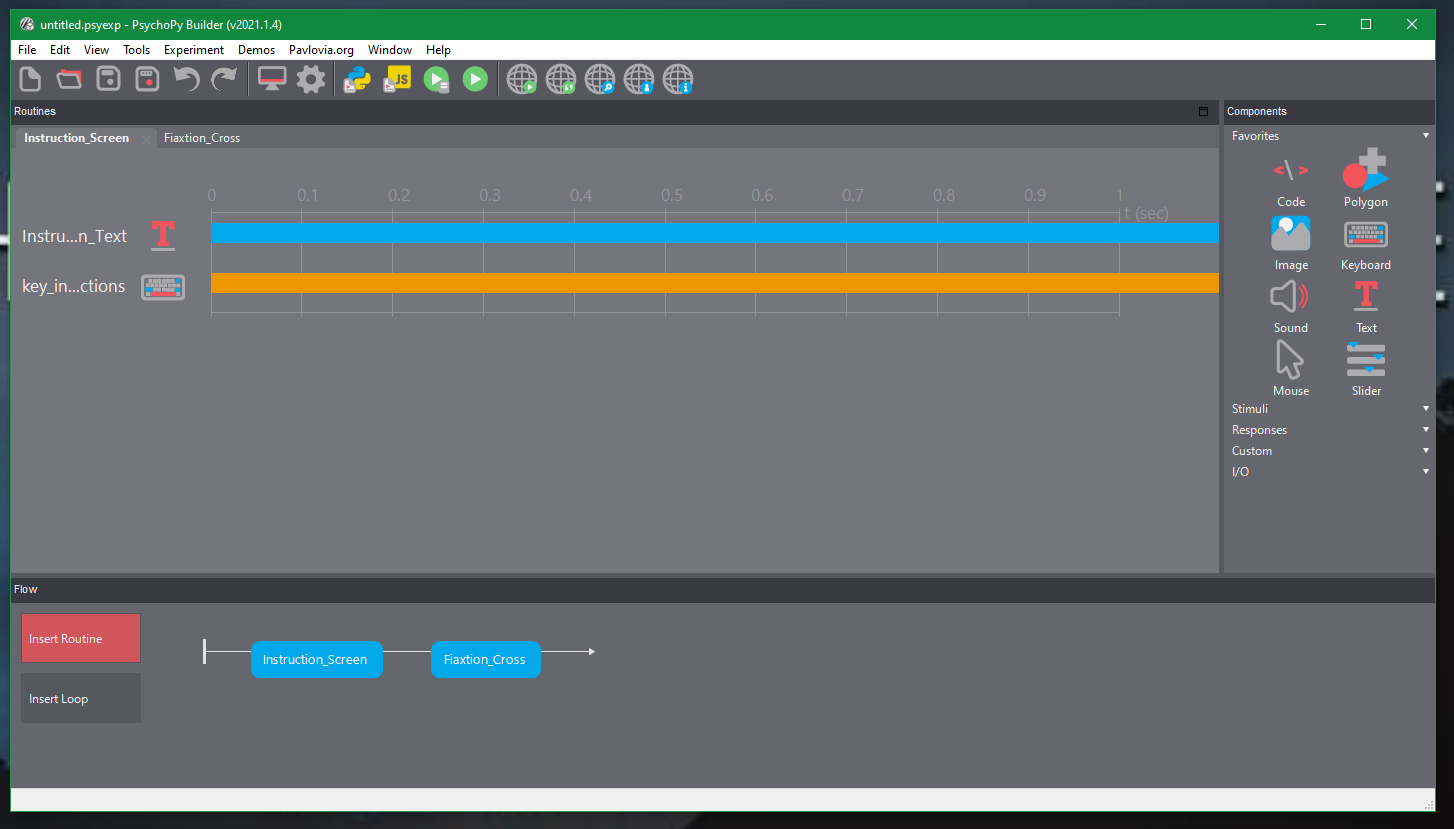

Currently we have one screen set up, which displays some text and waits for participants to press the space key to move on. That’s all we need for the instructions for now. We can move on to creating the trials.

We will first make a fixation cross. This screen ensures participants attend to the middle of the screen before we show them our linguistic stimuli. This control over attention is important if we want to collect reaction time data.

Click Insert Routine again, select (new), name it Fixation_Cross, and click on the dot on the timeline after the Instruction_Screen. This will place the fixation cross screen after the instructions.

10 - Adding a fixation cross routine to the timeline.

Click the Fixation_Cross tab in the Routines panel to ensure we will add components to the correct routine.

Add a Text component from the Stimuli tab in the Components panel just like you did for the Instruction_Screen.

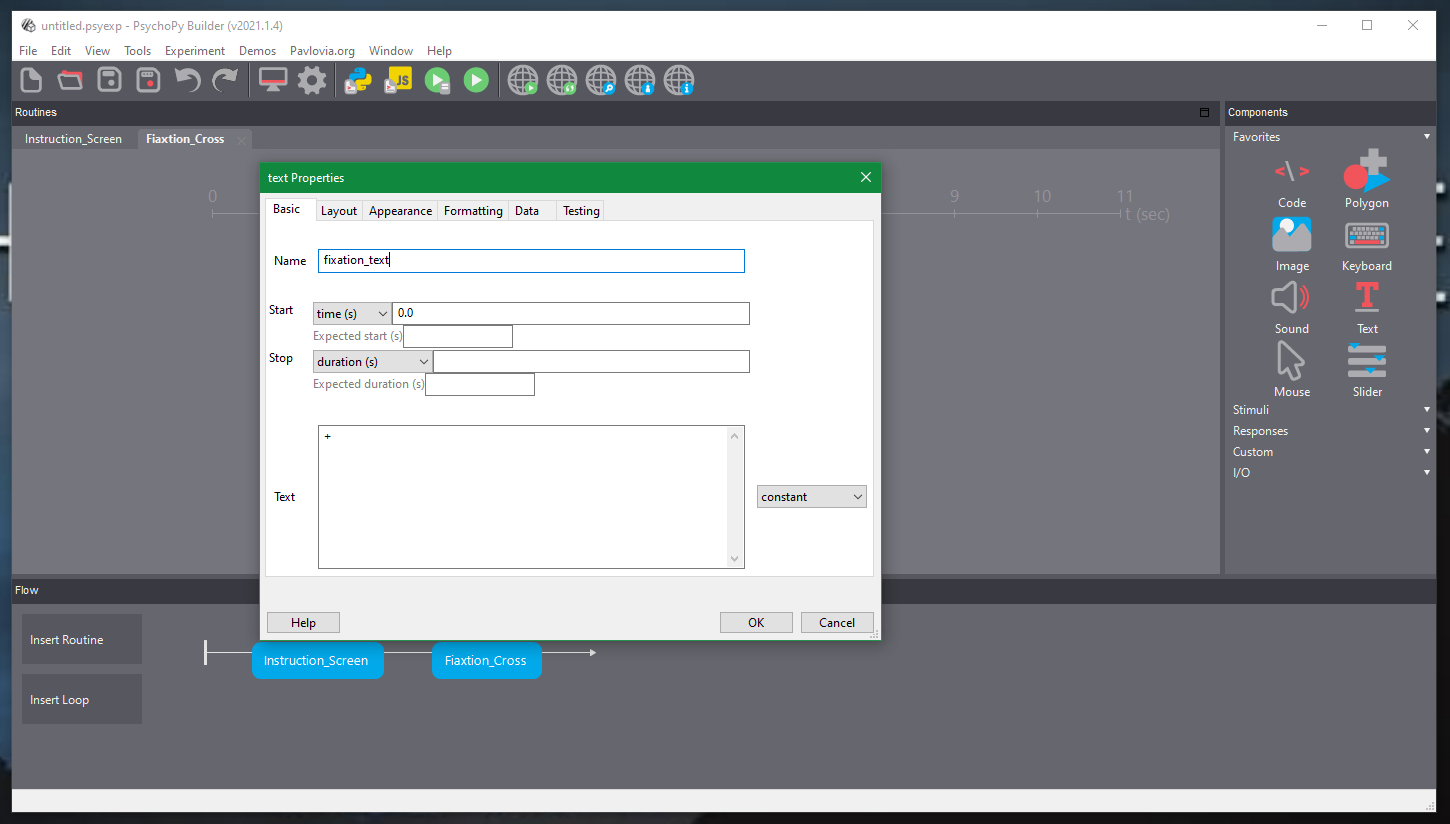

We are going to add a + symbol to the screen to act as a fixation cross. In the text Properties window under the Basic tab change the following:

Name: change this to fixation_text.

duration (s): delete the default value, leaving this section blank.

Text: change this to the plus symbol, +.

11 - Changing the fixation text.

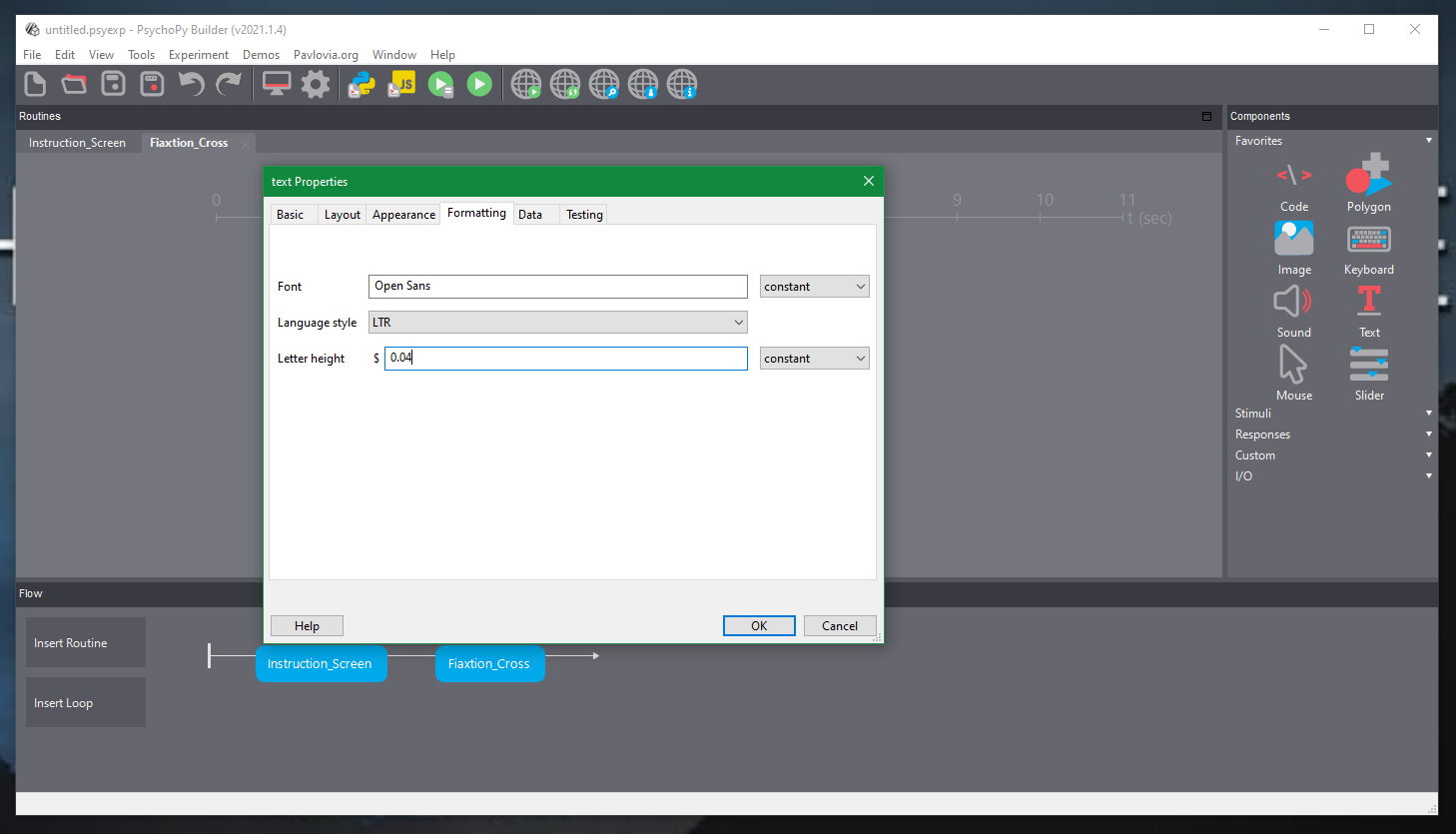

On the formatting tab, change the letter height to 0.04.

12 - Changing the letter height.

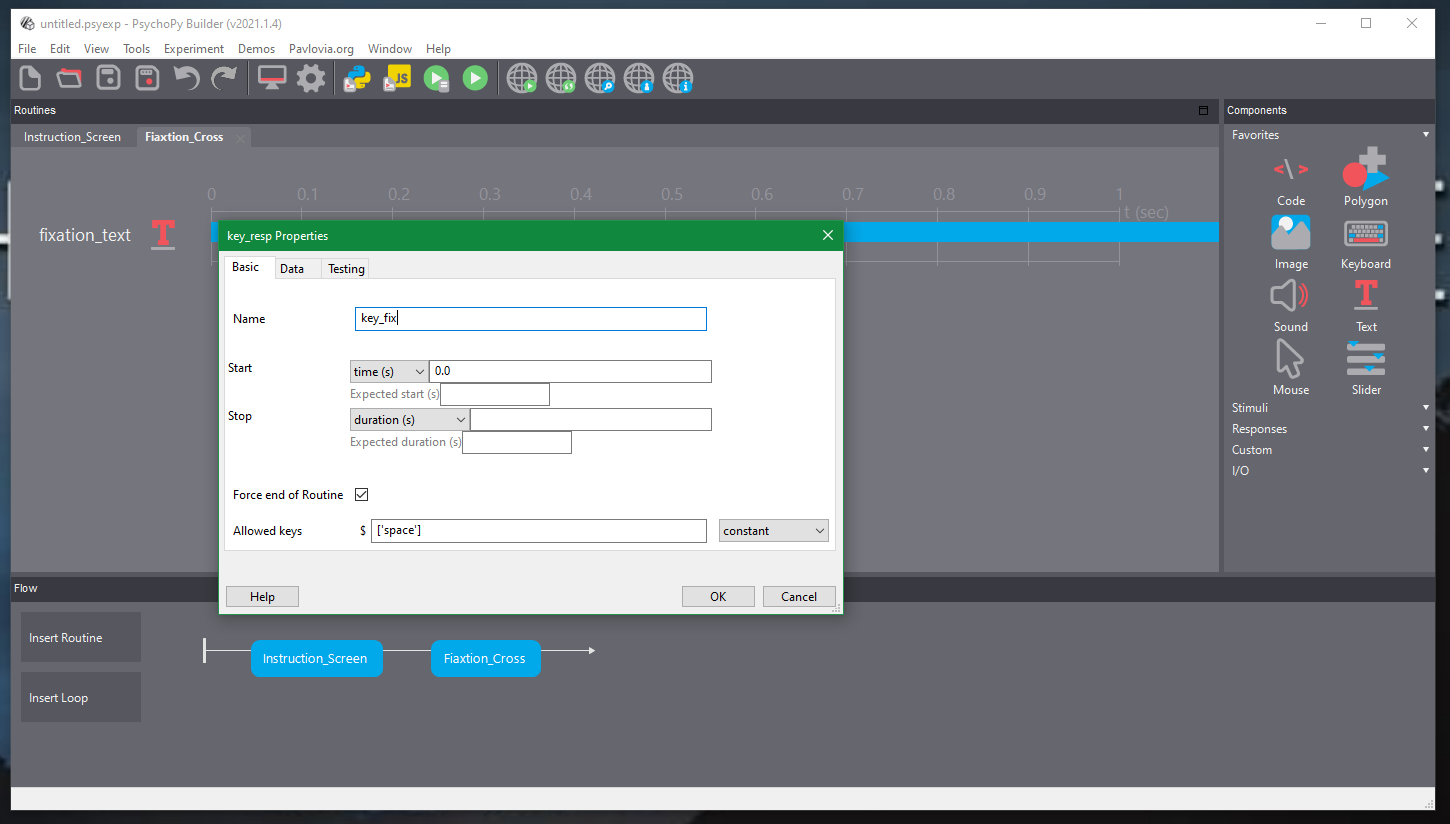

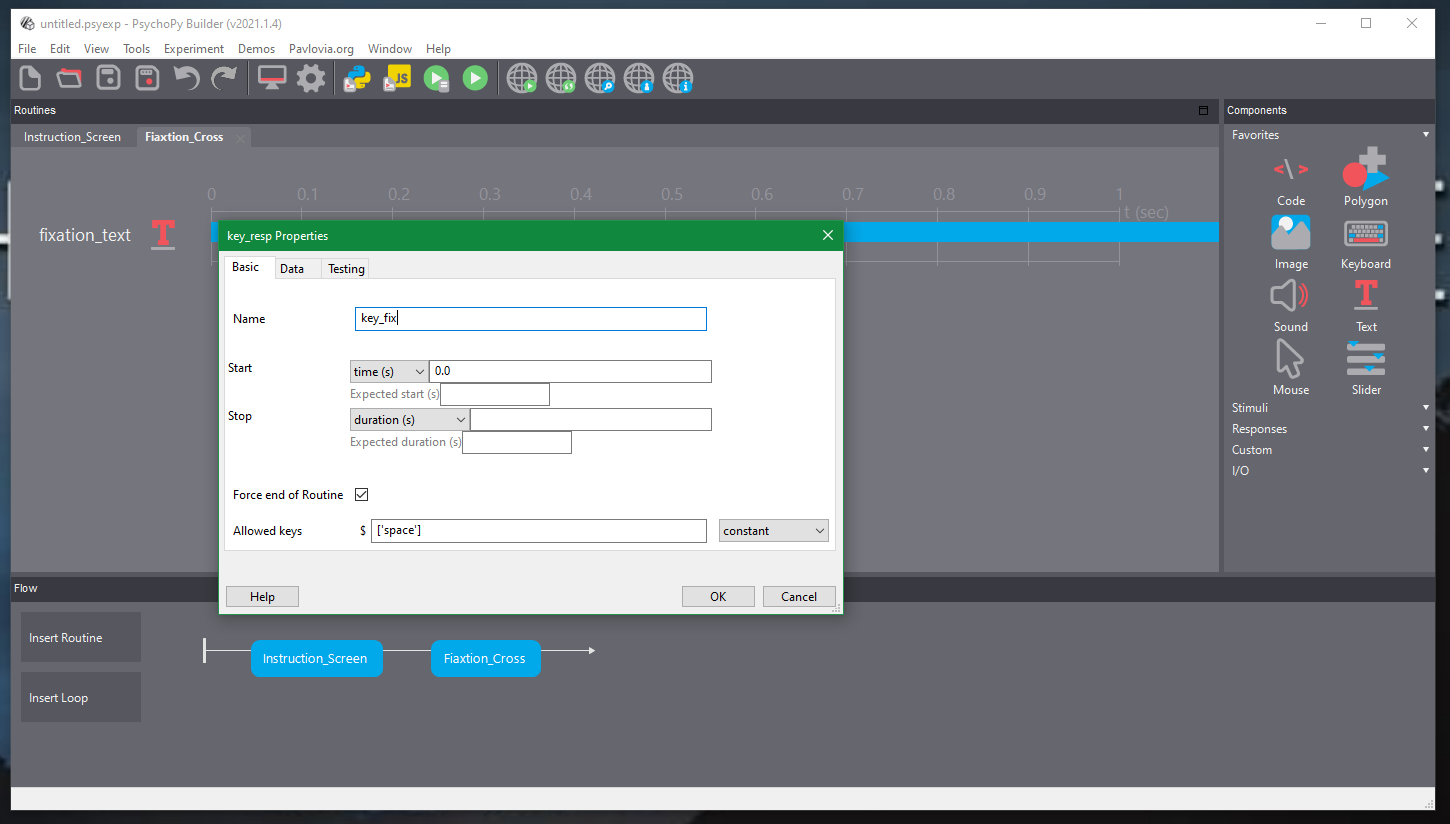

Next we’ll add a Keyboard component allowing participants to progress to the next screen by pressing the space bar.

Select the Keyboard component from the Responses tab in the Components panel. On the Basic tab, change the name to key_fix, and allowed keys to ['space'].

13 - Changing the keyboard details.

On the Data tab, change the Store values to nothing.

14 - Changing the data storage options.

Next we will make the trial screen. This screen will contain a sentence which participants will have to read before indicating whether the sentence makes sense or is nonsense. Participants will do this using either the W or S key on their keyboard. Which key they use will change halfway through the experiment, so for now we will set up a placeholder screen so that we can test the flow of the experiment before adding all of the details.

Add a new Routine, name it Sentence_Screen, and place it after the Fixation_Cross on the timeline.

Add a text component from the Stimuli tab in the Components panel.

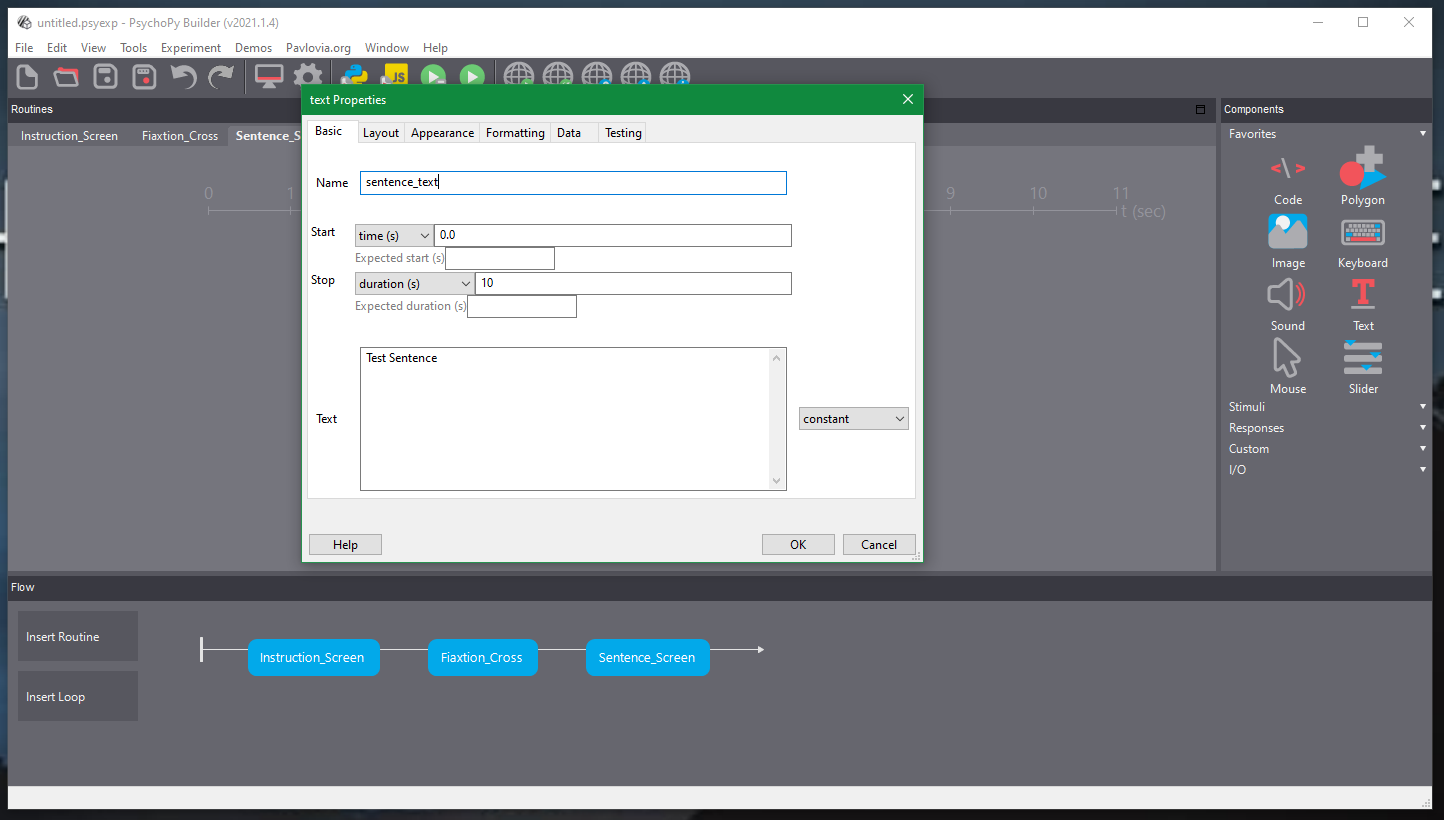

On the Basic tab, change the following:

Name: change this to sentence_text.

duration (s): 10.

Text: change this to Test Sentence. This is placeholder text that we will change once we have finalised the structure of the experiment.

15 - Editing the text details.

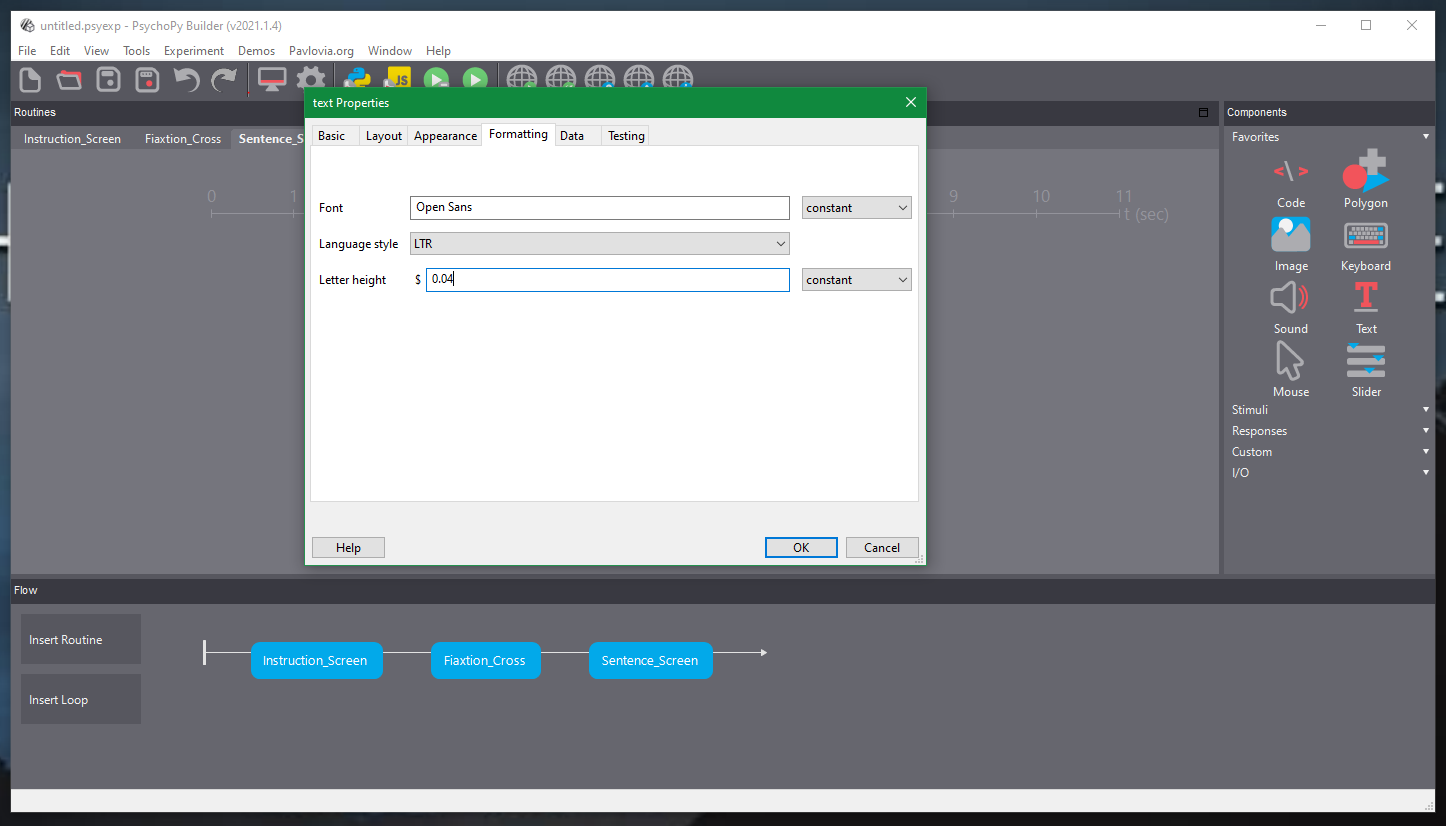

On the Formatting tab, change the letter height to 0.04.

16 - Changing the text formatting.

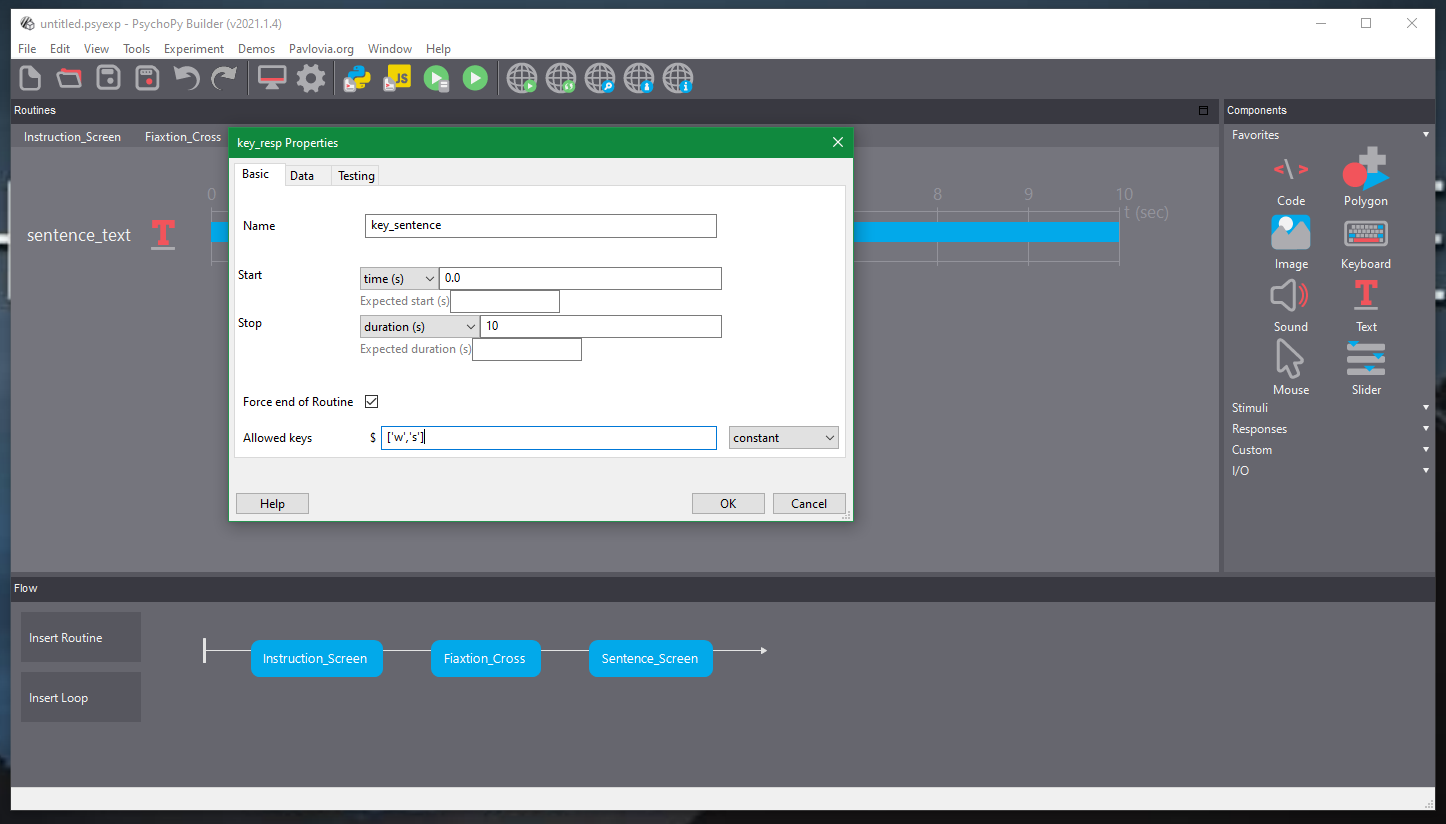

Add a keyboard component. This will allow participants to press the W or S keys to progress the experiment from the sentence screen.

On the Basic tab, change the following:

Name: change this to key_sentence.

duration (s): change this to 10. This gives participants at most 10 seconds to respond while the sentence is on screen.

Allowed keys: change this to ['w','s']. This means participants can only progress from this screen by pressing the W or S key on their keyboard.

17 - Changing the keyboard details.

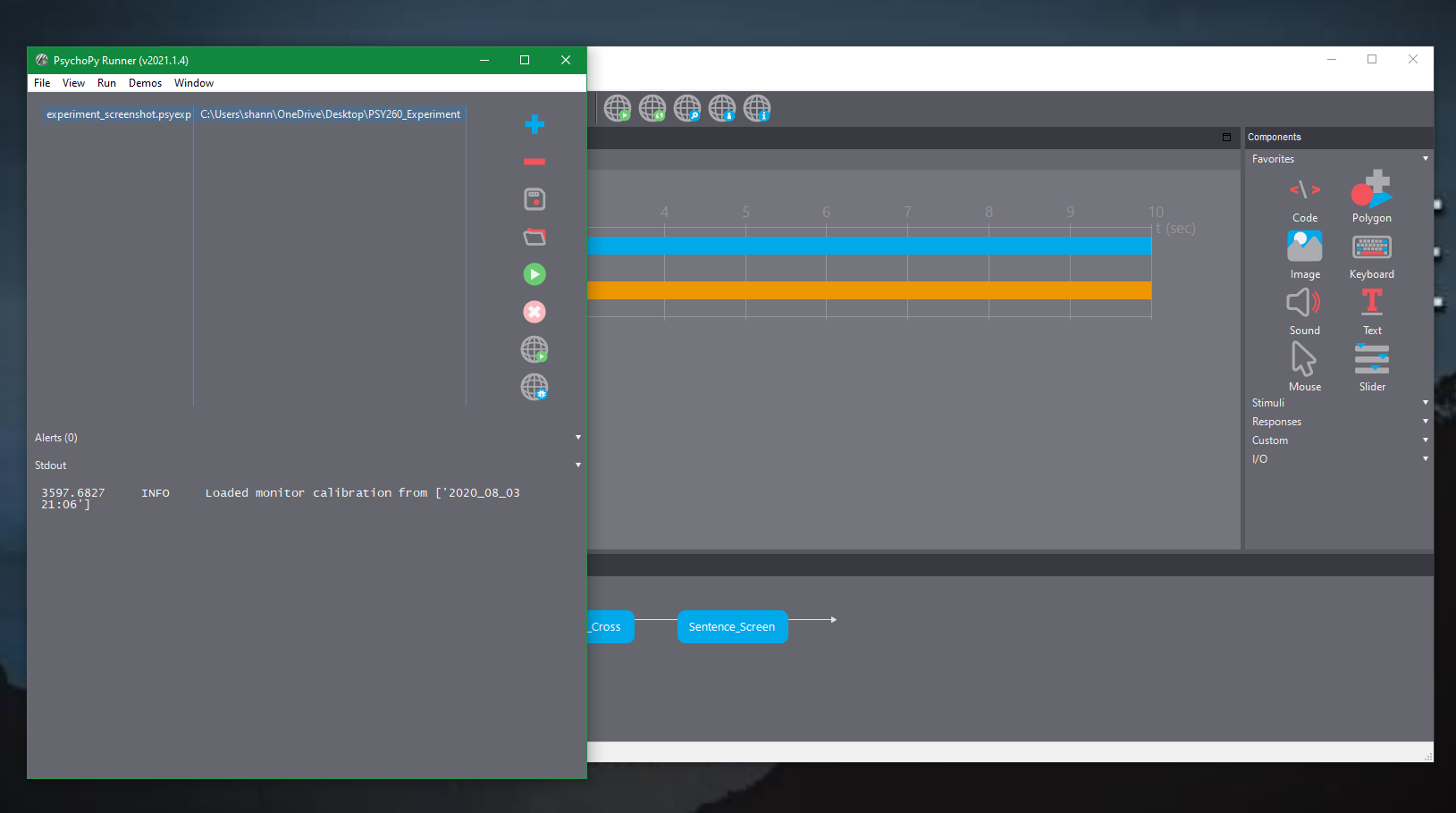

Now we should save the file and test that it runs the way we expect it to.

We can now run the experiment to check that everything we have set up so far is working. To do this, we are going to run it offline first.

If you’re a Mac user, you first need to make sure your Operating System allows keyboard responses to be monitored by PsychoPy. To do this, search for Security & Privacy on the Mac or alternatively go to Settings, and then Security & Privacy. Within this screen, scroll down to Input Monitoring. Click the padlock to allow changes to your preferences. Then tick the box next to the PsychoPy logo. This gives PsychoPy input monitoring rights. Your screen should now look like this.

18 - Giving PsychoPy input monitoring privileges on Mac OS.

To test the experiment we will click the first green play button with the grey box on it. This will send the experiment to the runner programme.

19 - Testing the experiment using the play button.

Within this screen press play.

20 - Press play to test your study.

You will see a popup screen asking you to input some information for the participant. Just type the number 1 in here and click OK.

You will see the text Our instructions. Press the space bar to move past this screen.

You will see a + symbol. Press the space bar to move past this screen.

You will see the sentence Test Sentence. Press W or S to move past this screen.

Now that we know the flow of the experiment is working, we need to make it show participants every sentence in our items list. We could add as many screens to the experiment as we have in the list, but this will involve a lot of repetition and won’t allow us to randomise the order of presentation for the sentences.

Instead, we will use a loop. Here, we create a single screen. We then make sure that the sentence shown on screen is selected from our list of items by using a variable. Our loop then ensures that we display this screen enough times to show every item from our list. This means managing our study is easier because we only have one screen to deal with if, for example, we want to change the font size. (That certainly beats changing 100+ items by hand!)

Change your font sizes as appropriate if this looks strange on your display.

Customising the Experiment

Loops and Lists

There are two files on GitLab called list_block_1.csv and list_block_2.csv. Please download and save these in the experiment folder you created at the beginning of this guide.

In the final study, participants will see 200 items in total, split evenly across 2 blocks of 100 items. In the first block, a sentence must be responded to as sensible or nonsense using the W or S keys. In the next block, we will change the keys around to ensure that any effects we find aren’t due to preferences for pressing one key over another. Across the files, we also vary whether a transfer sentence indicates transfer towards or away from the participant. So any given transfer sentence can have either W or S as the sensible response, and can describe transfer either towards or away from the participant. This leaves us with 4 versions of the sentences. We finally want to control whether some participants see a given sentence in the first or second block, hence the two files.

We thus randomly assign participants to one of these 4 versions, or lists, to ensure that we have properly counterbalanced the study.
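The four versions come from crossing two factors: which key means "sensible", and which direction the transfer runs. The sketch below rebuilds the four versions of item 1 from the example earlier in this guide using `itertools.product`, then draws a list number at random. The field and function names are illustrative assumptions. The real lists are supplied in the .csv files, and this guide assigns lists through Qualtrics rather than in this way.

```python
import random
from itertools import product

# The two counterbalanced factors.
SENSIBLE_KEYS = ["w", "s"]          # key that means "this makes sense"
DIRECTIONS = ["away", "towards"]    # direction of transfer relative to "you"

# Item 1 in both directions, taken from the example above.
ITEM_1 = {
    "away": "You sold the land to Hakeema.",
    "towards": "Hakeema sold the land to you.",
}

def item_versions(item):
    """Return every key x direction combination for one item (4 versions)."""
    return [
        {"sentence": item[direction], "direction": direction, "sensible_key": key}
        for key, direction in product(SENSIBLE_KEYS, DIRECTIONS)
    ]

versions = item_versions(ITEM_1)
assigned_list = random.randint(1, 4)  # one of the four lists, chosen at random
```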

These files contain the following columns of trial information:

list: Which list of items we will use to display sentences in the main phase of the study.

block: Which block of the study this data belongs to. There are two blocks in total. In the second block we reverse the buttons used to indicate a correct and incorrect response for counterbalancing.

phase: This indicates which phase of the study the item belongs to. This is ‘prac’ for the practice phase and ‘exp’ for the main, experimental phase of the study.

item: The number for the item corresponding to the sentence for each trial.

sensibility: Whether the sentence makes sense (sensible) or not (nonsense).

concreteness: whether the sentence describes a concrete or abstract scenario. For example, a concrete sentence might be ‘You dealt the cards to Chantelle’, while abstract might be ‘Umaira gave you another chance.’ If the sentence makes no sense, this is NA.

type: Whether the sentence describes transfer of something from one character to another (transfer) or not (no_transfer). If the sentence is nonsense, this is NA.

direction: If a sentence describes transfer, does the described transfer occur from a character to you (towards) or from you to the character (away). If no transfer occurs or the sentence is nonsense, this is NA.

sentence: The sentence to be displayed on screen to participants.

correct_response: The correct key on the keyboard that participants should press to respond if the sentence makes sense or not. For some participants the first block will make sensible items a W and nonsense items an S. For others this will be reversed.

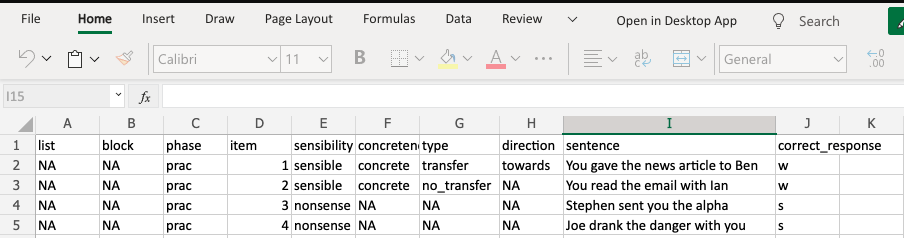

We currently only have the trial lists for the experimental blocks. We will now create our own practice trial list. To do this, create a .csv file in Excel, Google Sheets, or any spreadsheet program you’re comfortable with and save it as prac_trials.csv. Next, create 10 columns with the same names as those in the experimental trial files (listed above).

In the sentence column, you can add the following sentences in order.

You gave the news article to Ben.

You read the email with Ian.

Stephen sent you the alpha.

Joe drank the danger with you.

These will be the sentences participants will see on screen across four practice trials.

Fill out the remaining details here using the information in the screenshot below.

21 - The information to add to the prac_trials.csv file.
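If you would rather generate prac_trials.csv with a script than with a spreadsheet, the sketch below writes the ten column headers and one row per practice sentence using Python's standard `csv` module. Only `phase` (set to 'prac', as described above) and `sentence` are filled in. Every other cell is deliberately left blank and still needs the values from the screenshot. The function names are hypothetical.

```python
import csv
import io

# The ten columns shared with list_block_1.csv and list_block_2.csv.
COLUMNS = ["list", "block", "phase", "item", "sensibility", "concreteness",
           "type", "direction", "sentence", "correct_response"]

PRACTICE_SENTENCES = [
    "You gave the news article to Ben.",
    "You read the email with Ian.",
    "Stephen sent you the alpha.",
    "Joe drank the danger with you.",
]

def practice_csv_text():
    """Build the CSV text: a header plus one partly filled row per sentence."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)  # missing keys become ""
    writer.writeheader()
    for sentence in PRACTICE_SENTENCES:
        writer.writerow({"phase": "prac", "sentence": sentence})
    return buffer.getvalue()

def save_practice_csv(path="prac_trials.csv"):
    """Write the template into the experiment folder (fill the blanks afterwards)."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        f.write(practice_csv_text())
```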

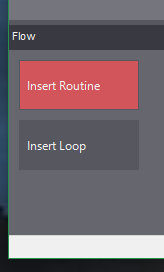

Now that we have created the practice trial list, we need to add a loop to the experiment. We do this by clicking Insert Loop and then choosing where it should begin and end on the timeline. Here, we want it to start just before the Fixation_Cross routine and end right after the Sentence_Screen routine.

22 - Inserting a loop.

Rename the loop to prac_trials. We will then set some important information for the loop. First, in the popup menu set the nReps to 1, then click the folder icon next to the conditions section. Select your prac_trials.csv file and then click OK.

We have now loaded our trial information for the practice section into PsychoPy and through nReps ensured that people only see this entire table of trials once.

23 - Selecting the variables for the practice trial loop.

As of right now, nothing different will be shown in the experiment, other than it looping four times. This is because we have not told PsychoPy which parts of the experiment need to be updated on every iteration of the loop.

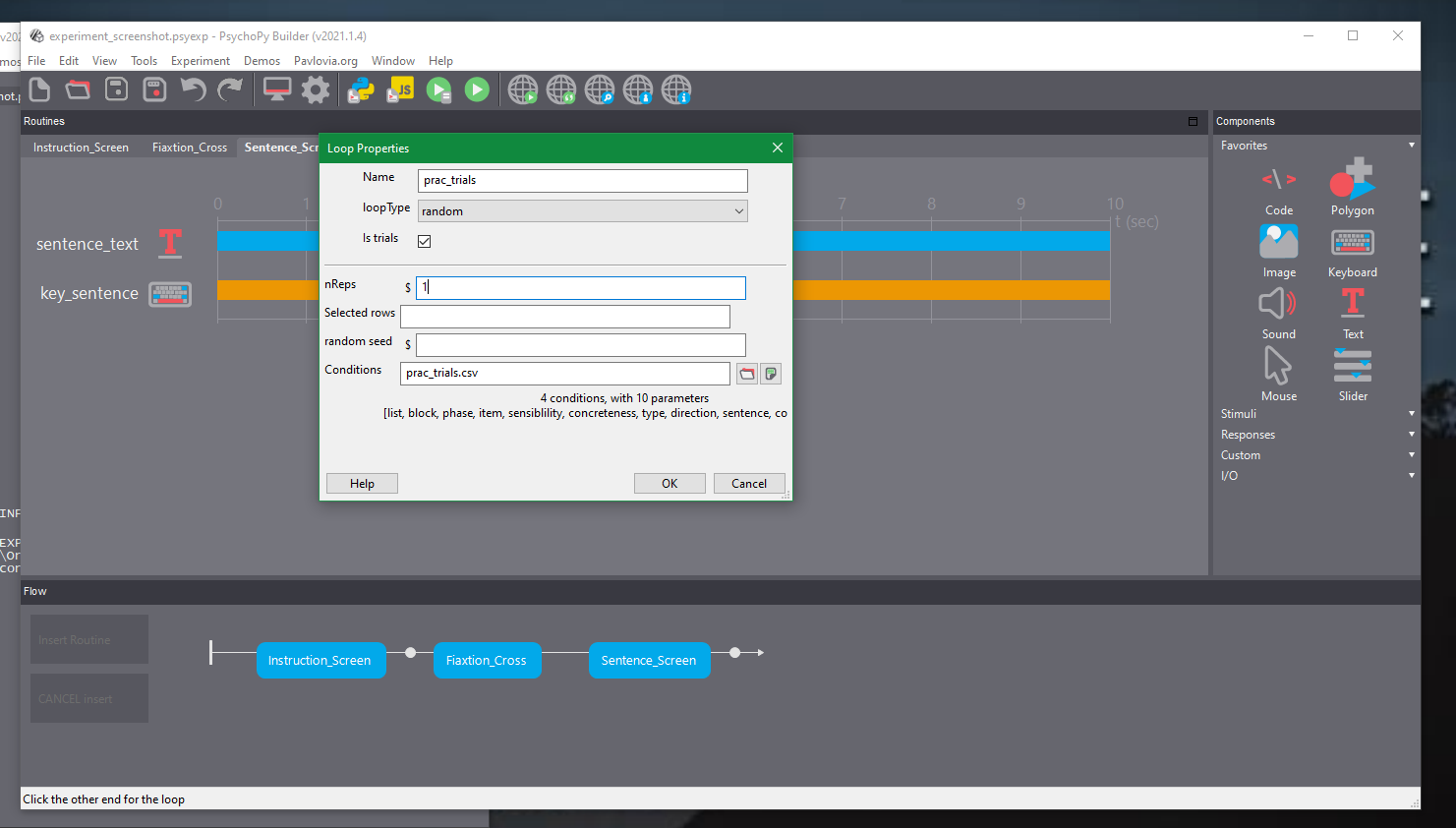

To make the sentences update and the correct response vary for any given trial we need to set our variables.

First, to update the sentences we need to go back to the Sentence_Screen routine and open the sentence_text component. We will then delete Test Sentence from the Text box and write $sentence. We will then change the text from constant to set every repeat. (This option is a dropdown menu right next to the Text option in the popup.)

The dollar sign signifies that PsychoPy should look for a variable named sentence (a column in the prac_trials.csv file). Setting the text to set every repeat means that on every iteration of the loop PsychoPy reads the sentence from a different row of the file.

24 - Updating our sentences in the practice loop.
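Conceptually, each pass of the loop takes the next row of the conditions file and makes each column available as a variable of the same name. That is what `$sentence` refers to. The sketch below mimics this behaviour in plain Python, including how nReps repeats the whole table. It ignores randomisation and omits the other columns. It illustrates the idea and is not PsychoPy's internal code.

```python
def sentences_shown(conditions, n_reps=1):
    """Return the sentence shown on each trial, one row per iteration."""
    shown = []
    for _ in range(n_reps):            # nReps repeats the whole table
        for this_trial in conditions:  # one row of the .csv per iteration
            # '$sentence' with 'set every repeat' re-reads this column each time.
            sentence = this_trial["sentence"]
            shown.append(sentence)
    return shown

practice_rows = [
    {"sentence": "You gave the news article to Ben."},
    {"sentence": "You read the email with Ian."},
    {"sentence": "Stephen sent you the alpha."},
    {"sentence": "Joe drank the danger with you."},
]
```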

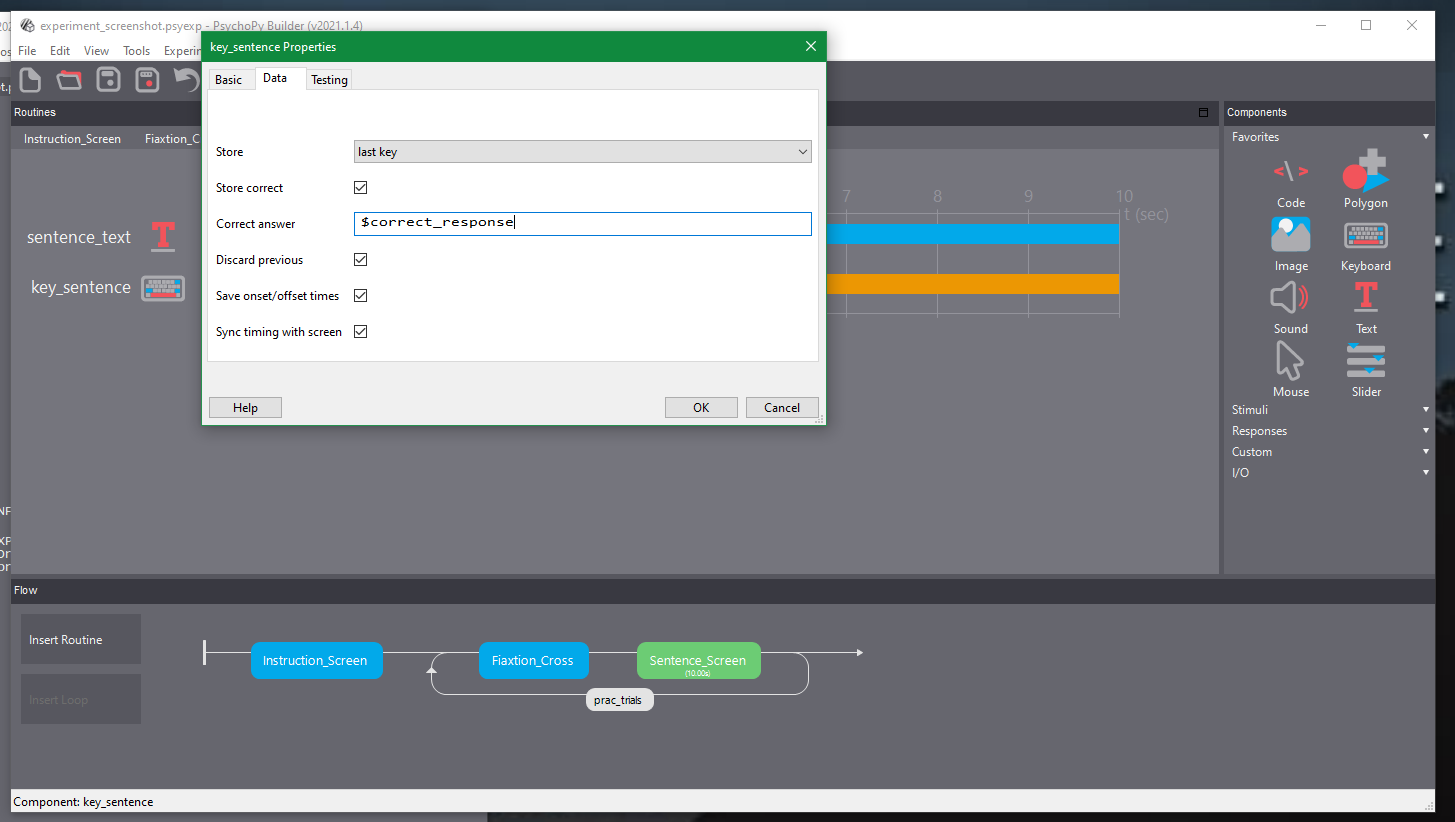

To update the correct responses for each trial we then need to click on the key_sentence component and in the popup menu go to the Data tab. From here, tick the Store correct box, and in the Correct answer box enter $correct_response. This allows the correct response to vary for each trial in the same way the sentence varies; PsychoPy looks in the correct_response column in the prac_trials.csv file and pulls out the entry for a given trial as the correct key participants should choose.

25 - Updating the correct answer in the practice loop.
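Ticking Store correct amounts to comparing the key that was pressed with that trial's correct_response, then recording 1 for a match and 0 otherwise. Below is a minimal sketch of that comparison. It assumes that no response within the 10-second window counts as incorrect, and the function name is hypothetical.

```python
def score_response(key_pressed, correct_response):
    """Return 1 if the pressed key matches correct_response, otherwise 0.

    key_pressed is None when no key was pressed before the sentence timed out;
    this sketch treats that as an incorrect trial.
    """
    if key_pressed is None:
        return 0
    return int(key_pressed.strip().lower() == correct_response.strip().lower())
```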

We should now run the experiment to test that the sentences are updating every loop. Do this by pressing play in the same way as we did earlier.

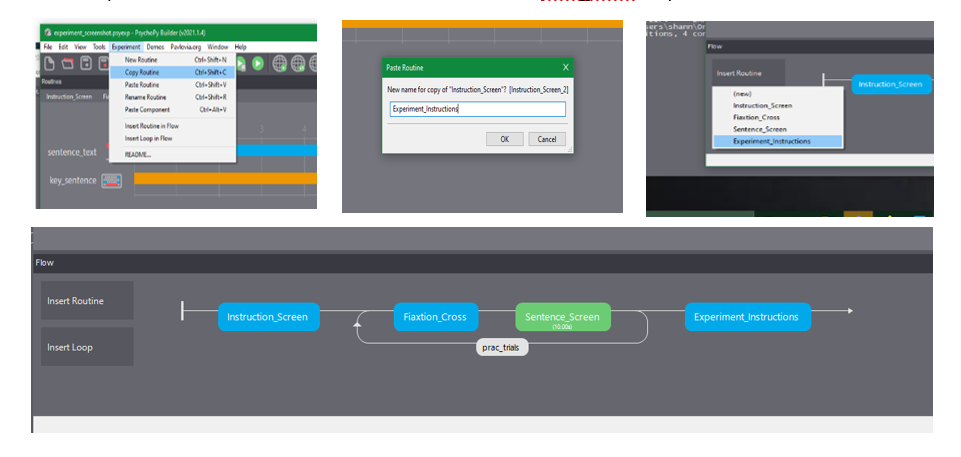

After the practice loop we want to show the instructions again. Our first step is to copy the Instruction_Screen routine. First click on the Instruction_Screen routine, then select the Experiment menu at the top of PsychoPy, and click Copy Routine. Then click Paste Routine. You will be prompted to rename the routine; rename it to Experiment_Instructions. Then click Insert Routine in the Flow panel, select Experiment_Instructions, and place it at the end of the timeline, outside the prac_trials loop.

26 - Inserting the new instructions in the timeline.

Now we need to add an experimental loop. Click on Insert Routine in the Flow tab. Then click on Fixation_Cross and add it to the timeline after the Experiment_Instructions routine. Do the same for the Sentence_Screen.

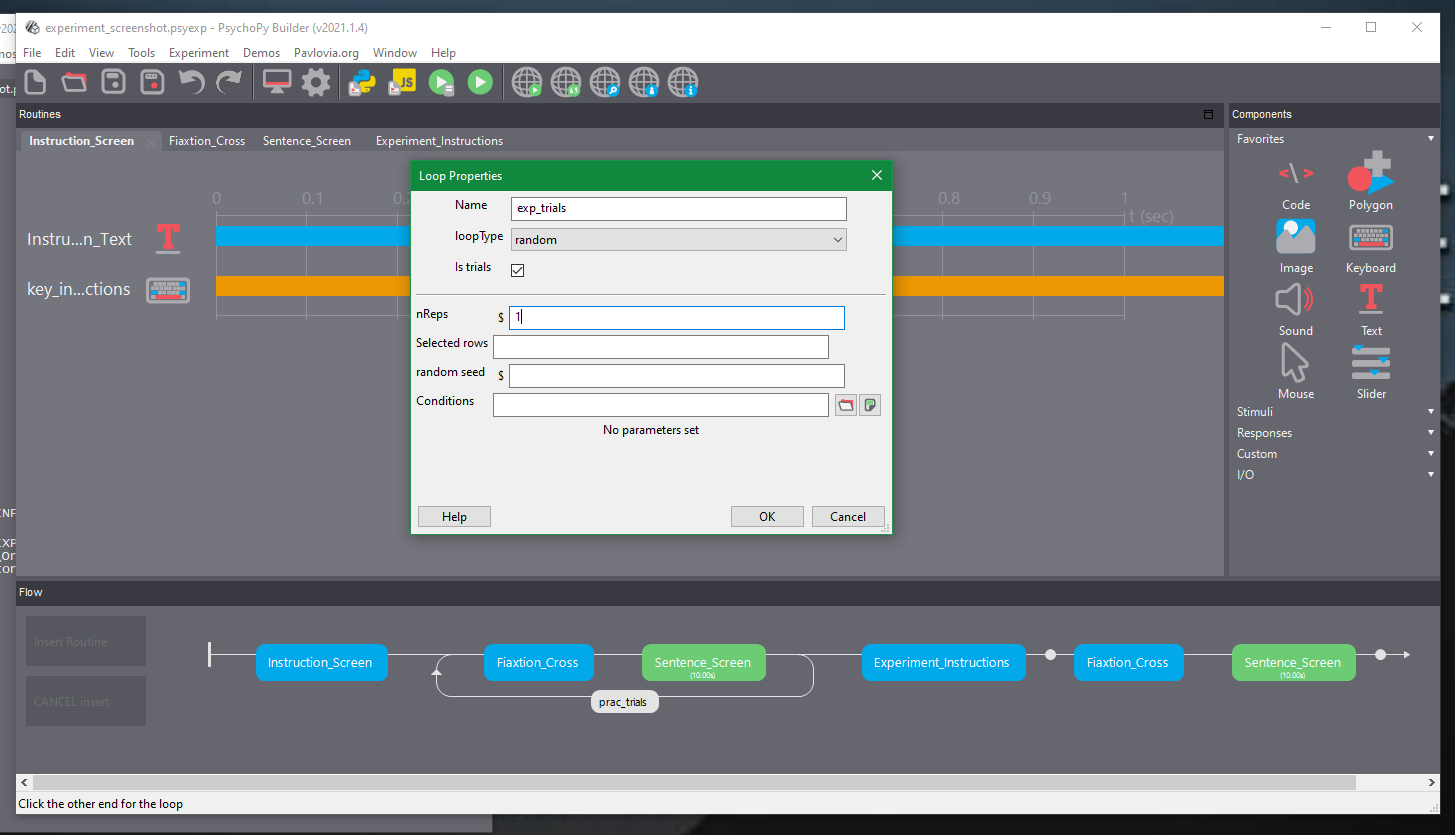

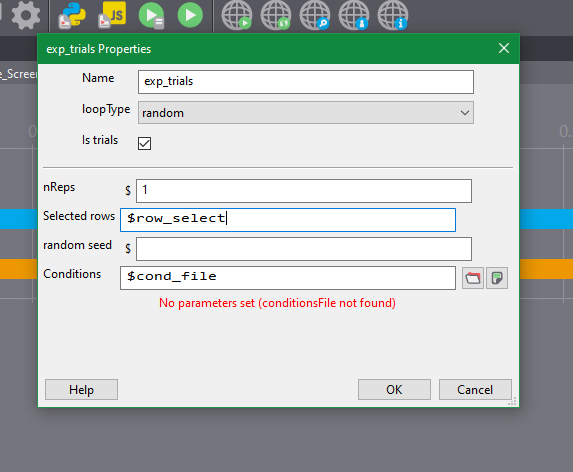

Next, insert a loop for the experimental trials. In the Flow tab click Insert Loop. Set this to begin before the Fixation_Cross and to end after the Sentence_Screen. Name this loop exp_trials, and then set the nReps to 1.

27 - Inserting the main experiment loop.

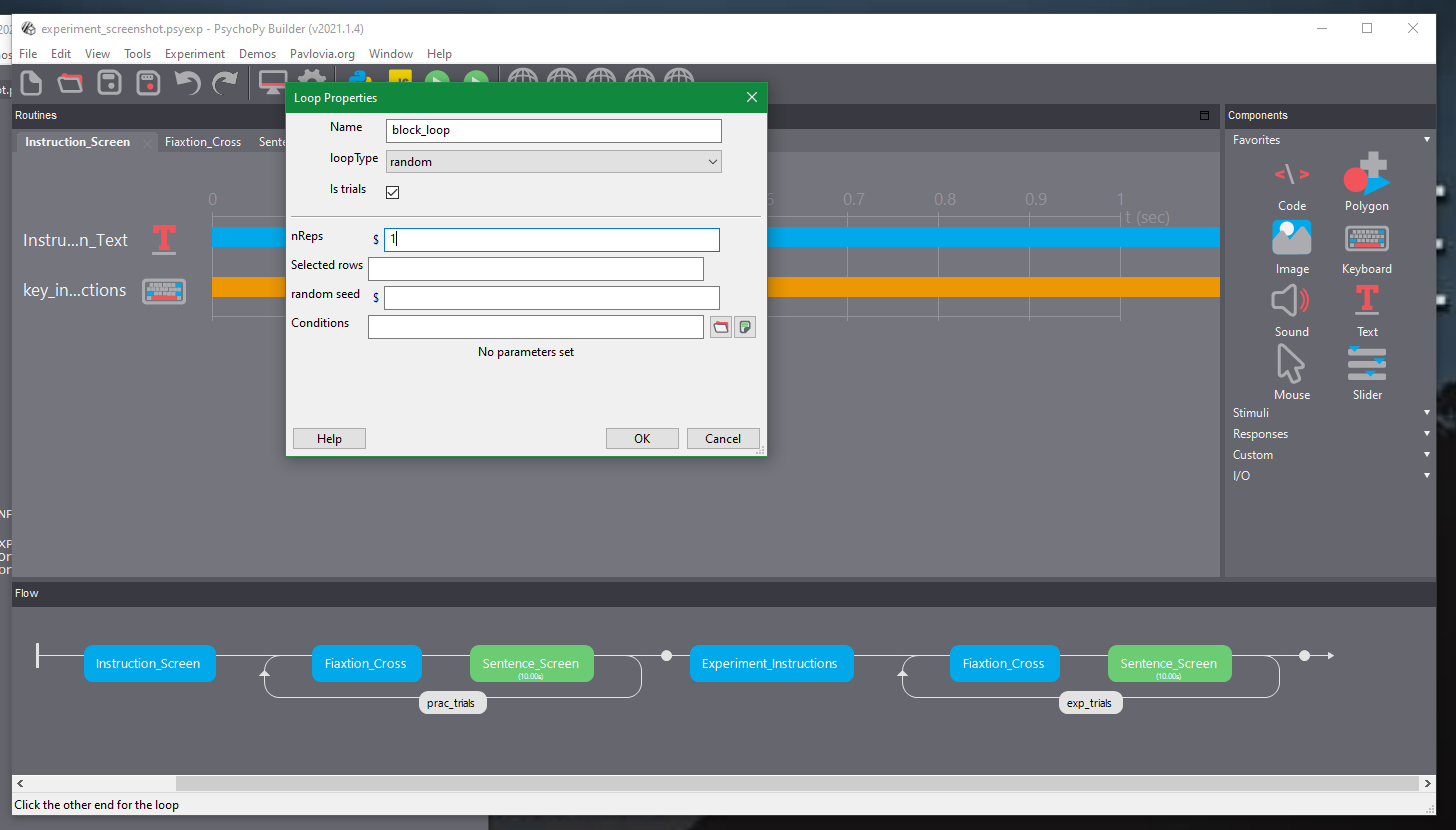

Because we have two blocks we want to show, we’d like to repeat showing the instructions along with the experimental block loop. To do this, add another loop around the Experiment_Instructions routine and the exp_trials loop by clicking Insert Loop. Name this loop block_loop and set the nReps to 1.

This will ensure that for each block of the study, participants will see the instructions (which vary across blocks). Within each block participants will see a fixation cross and a sentence screen on every trial.

28 - Inserting the block loop.

Now that we have the general flow of the experimental blocks of the study, we have to let PsychoPy know where to access the trial information for each block.

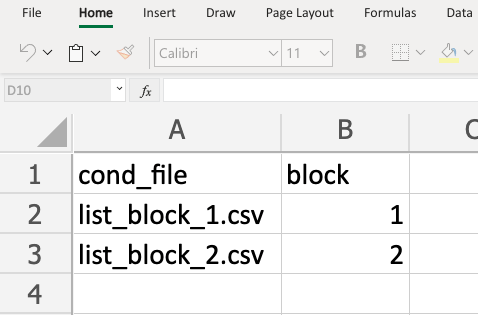

We will now create a conditions file that will update which file the loop will use on each iteration. Create a .csv file as before and make two columns with the following titles and contents:

cond_file: this has the entries list_block_1.csv and list_block_2.csv. This will change which conditions file each block loop is reading from.

block: this has the entries 1 and 2. This column keeps track of which block has been picked so we can use this in assigning the correct instructions for a given block.

Save the .csv file as cond_files.csv.

29 - Creating cond_files.csv for the block_loop.
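cond_files.csv is small enough to type by hand. For completeness, here is a sketch that produces the same two columns and two rows with Python's `csv` module. The function names are hypothetical.

```python
import csv
import io

BLOCK_ROWS = [
    {"cond_file": "list_block_1.csv", "block": 1},
    {"cond_file": "list_block_2.csv", "block": 2},
]

def cond_files_text():
    """Return the CSV text for cond_files.csv (header + one row per block)."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["cond_file", "block"])
    writer.writeheader()
    writer.writerows(BLOCK_ROWS)
    return buffer.getvalue()

def save_cond_files(path="cond_files.csv"):
    """Save next to list_block_1.csv and list_block_2.csv in the experiment folder."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        f.write(cond_files_text())
```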

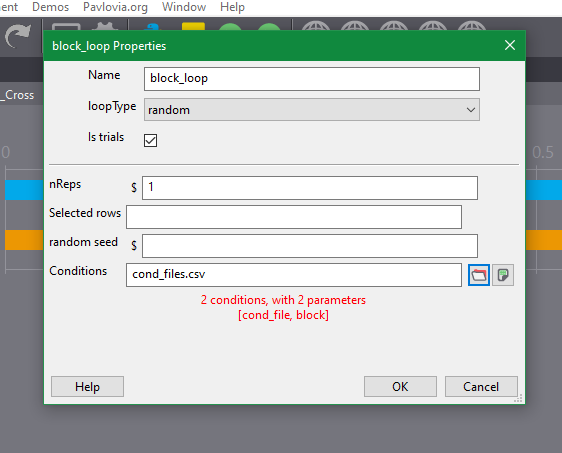

Click on the block_loop label in the timeline. You should see the block_loop Properties popup window. Next to the Conditions line, select the folder icon and choose cond_files.csv, then set the loop type to sequential. Click OK.

30 - Setting the block loop properties.

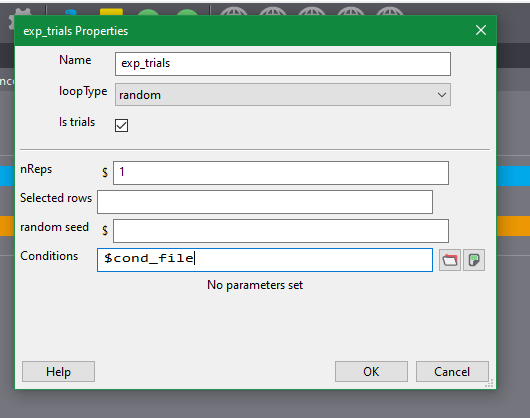

Next, click on the exp_trials loop label in the timeline. In the Conditions section type $cond_file.

Now, the block_loop randomly selects the order of the blocks, and thus which item list should be selected for a given block. By choosing the conditions file to be decided by whatever the block_loop picked, we allow the exp_trials loop to randomly select items only from the correct .csv file for any given block.

Now, our blocks are randomly ordered for any given participant: some see the item list named ‘list_block_1.csv’ first, while others see ‘list_block_2.csv’ first. Crucially, the presentation of trials within these blocks is also randomly ordered.

31 - Setting the exp_trials to use dynamic updating of the item list.
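The nesting can be pictured as one loop inside another. The outer block_loop picks a row of cond_files.csv, and the inner exp_trials loop reads whichever file that row names. The sketch below uses made-up placeholder items rather than the real lists. The `shuffle_blocks` flag reflects that block order depends on the loop type set for block_loop: random shuffles the two blocks, while sequential keeps them in file order.

```python
import random

# Placeholder stand-ins for the rows of the two conditions files.
FILES = {
    "list_block_1.csv": ["block 1 item A", "block 1 item B", "block 1 item C"],
    "list_block_2.csv": ["block 2 item A", "block 2 item B", "block 2 item C"],
}
COND_FILES = [
    {"cond_file": "list_block_1.csv", "block": 1},
    {"cond_file": "list_block_2.csv", "block": 2},
]

def run_session(cond_files, files, shuffle_blocks=True, seed=None):
    """Return (block, item) pairs in presentation order."""
    rng = random.Random(seed)
    blocks = list(cond_files)
    if shuffle_blocks:
        rng.shuffle(blocks)                             # block_loop set to random
    log = []
    for this_block in blocks:                           # outer loop: block_loop
        trials = list(files[this_block["cond_file"]])   # exp_trials uses $cond_file
        rng.shuffle(trials)                             # trials random within a block
        for item in trials:                             # inner loop: exp_trials
            log.append((this_block["block"], item))
    return log
```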

Custom Code

Remember that each file of items (i.e. list_block_1.csv and list_block_2.csv) has separate lists within it. These lists essentially have the same items within them, only with different versions which reflect the four combinations of direction of movement in the sentence (i.e. towards or away from the participant) and the button participants use to select a correct response (i.e. W or S). (Look at list_block_1.csv and compare item 1 in list 1 and list 3 to see how this works.)

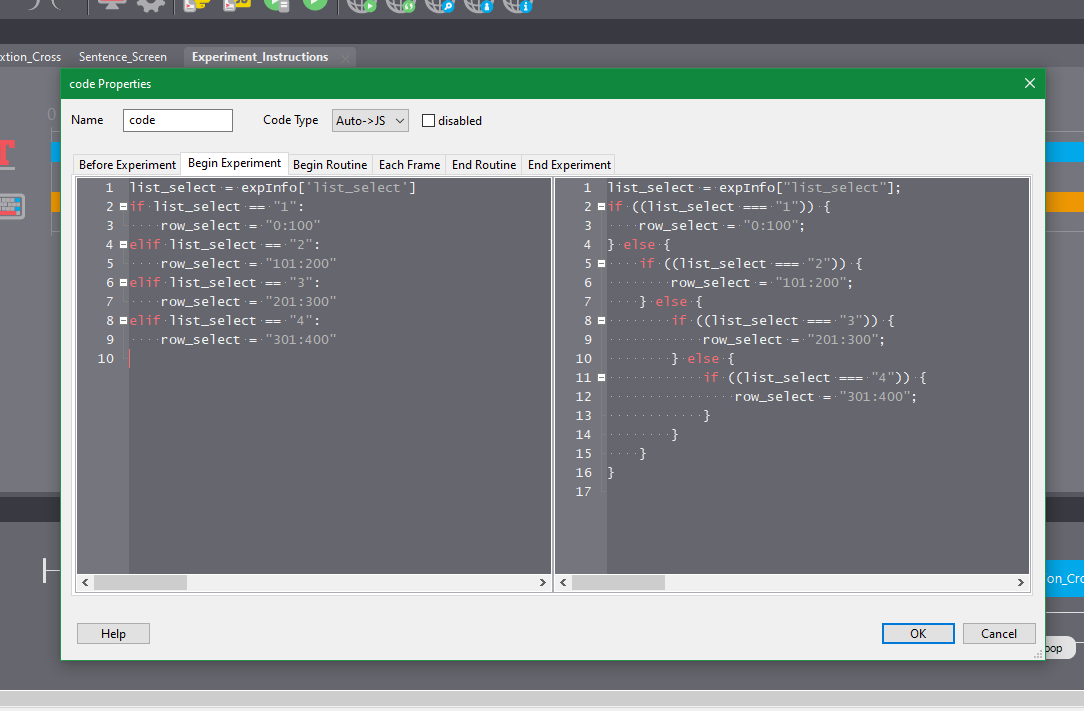

We don’t want to show participants the same item 4 times. Instead, we want them to see only 1 version of each item. So we need some custom Python code to (1) assign participants to a given list, and (2) subset the list of items to only those from the participant’s list.

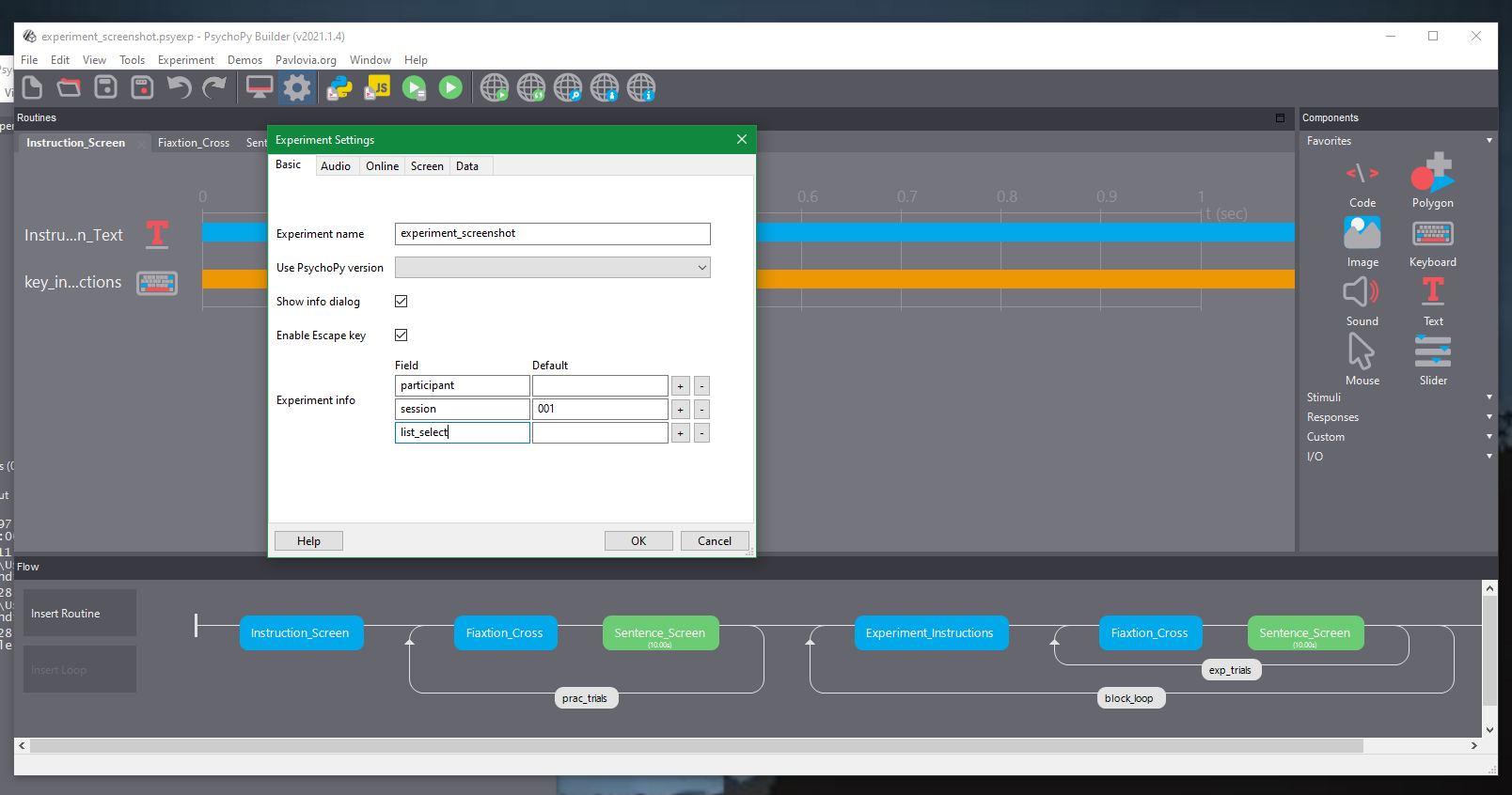

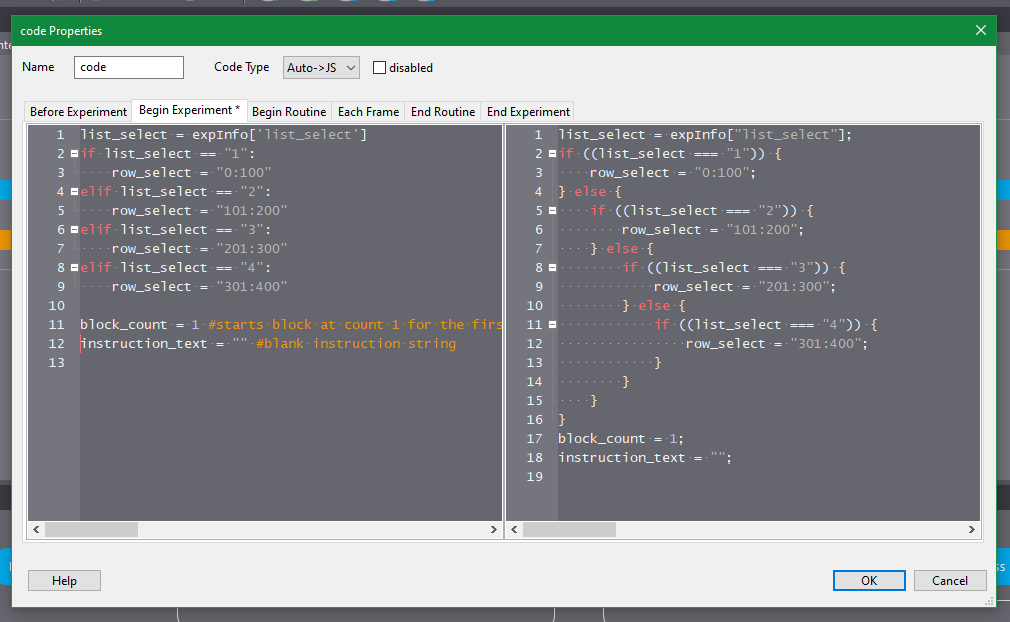

We will focus on how to randomly assign a list in Qualtrics later. For now we will manually select which list to use via the expInfo options in the experiment settings. Access the experiment settings by clicking the big cog symbol at the top of the PsychoPy interface. You will see a popup menu for the settings. In the Experiment info row, click the + symbol to create a new data field. In the field column, name the data field list_select. Leave the Default value blank. Click OK. The experiment will now ask which list to use at the beginning of the study.

32 - Accessing the experiment settings and creating the list_select field.

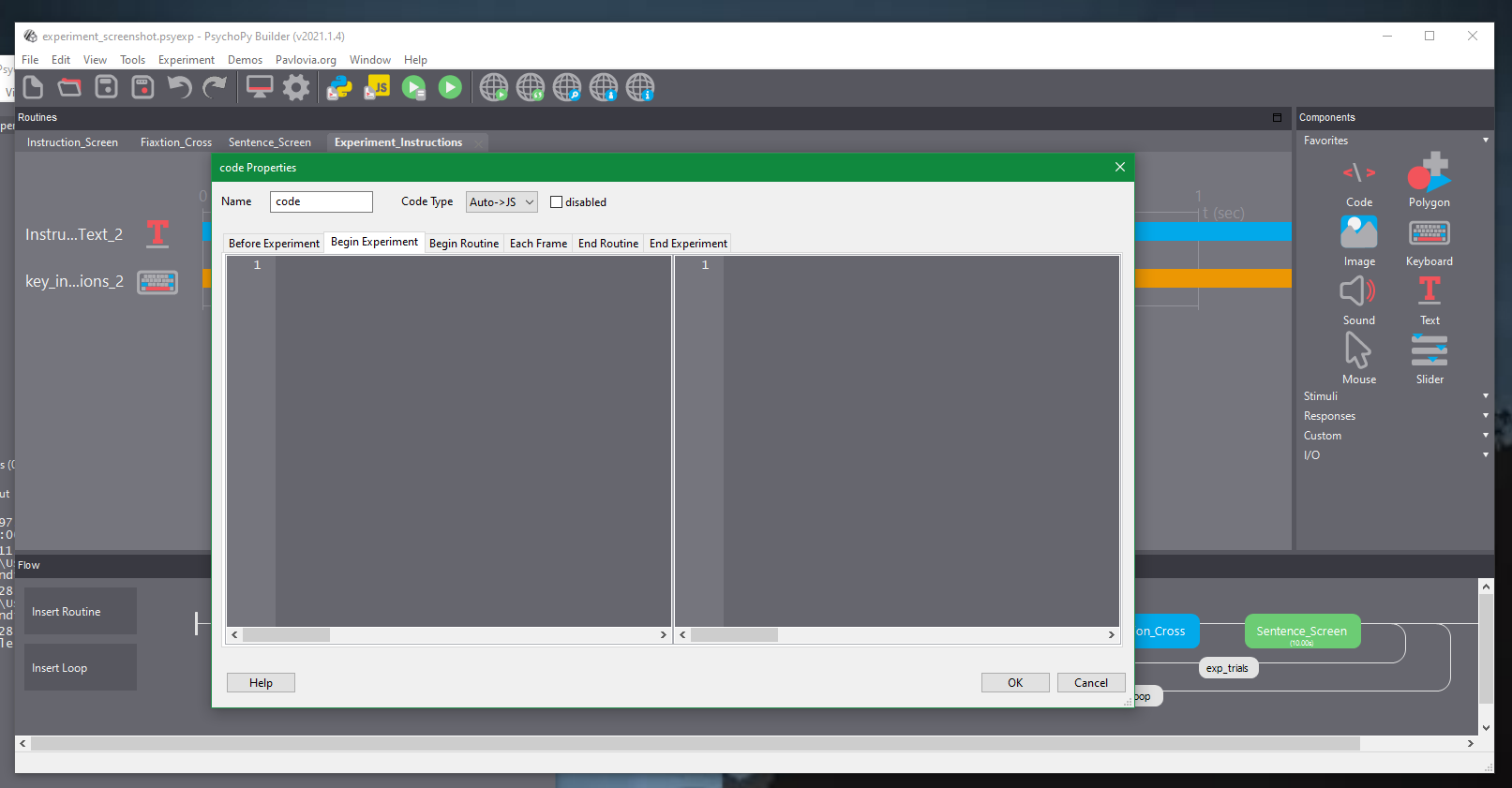

Now we will add a custom code component. First, click on the Experiment_Instructions tab. Then, in the Custom section of the Components panel, choose Code. You will see a popup window.

33 - Adding the custom code component.

34 - Since the study now asks us which list to use at the beginning of the study, we can use this input to filter our items down to only those for the correct list of items. That’s what this code does. It checks which list is selected and then keeps rows only for the items associated with that list.

In the popup window, click on the Begin Experiment tab. Paste in the following code and then click OK.

35 - Adding the code to the Begin Experiment tab.

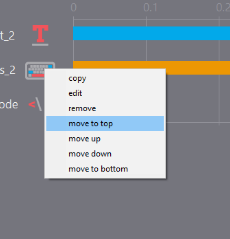

Right-click the code component and select move to top. This will make the code run at the beginning of the routine before participants see anything on screen.

36 - Reordering the code component.

Now that we have determined which rows we would like to keep from the item list for each participant, we need to apply the subsetting of the list within the experimental trials loop.

Click on the exp_trials loop and in the exp_trials Properties popup window type $row_select in the Selected rows box. This adds a variable to the loop which allows it to filter the list of items down to only those in a given list.

37 - Applying the list filtering to each conditions file.
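As we understand PsychoPy’s loop settings, a "start:stop" value in Selected rows works like a Python slice: row 0 is the first item row under the header, and the stop row is excluded (so 0:5 selects the first five rows). The sketch below uses a hypothetical helper, rows_from_selection (not a PsychoPy function), and assumes a conditions file with 400 item rows, to show which rows each value of row_select would keep.

```python
def rows_from_selection(selection, n_rows):
    """Return the zero-based row indices kept by a "start:stop" string.

    Hypothetical helper that mimics Python slice rules (stop excluded);
    it is not part of PsychoPy and only handles the plain "start:stop" form.
    """
    start_text, stop_text = selection.split(":")
    return list(range(n_rows))[int(start_text):int(stop_text)]


# Assumes 400 item rows in the conditions file (row 0 = first row under the header).
for row_select in ["0:100", "101:200", "201:300", "301:400"]:
    kept = rows_from_selection(row_select, 400)
    print(f"{row_select} -> rows {kept[0]} to {kept[-1]} ({len(kept)} rows)")
```

Under these assumptions, "0:100" keeps rows 0 to 99 while "101:200" keeps rows 101 to 199, so row 100 is never used. If each list in your files occupies a consecutive block of 100 rows, ranges starting at 100, 200 and 300 would be needed instead; check the ranges against your own conditions files before collecting data.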

Next we need to add some meaningful instructions to the experiment. We will start with the practice instructions. We want our instructions to explain what the participant is expected to do. Select the Instruction_Screen routine and click on the text component there. Replace the text in the Text box with the following:

Read the sentence and decide if it is sensible.

Press W for Yes

Press S for No

Press Space when you are ready to start and to progress through each screen.

38 - Adding instructions to the practice trials.

Since the experiment has one key for a sensible answer in the first block (e.g. W), and then reverses the key for the second block (e.g. S), we need to dynamically update our instructions for each block.

These answers will be based on the correct_response column in the conditions files list_block_1 and list_block_2.

For block 1:

Lists 1 and 2: W for Yes and S for No

Lists 3 and 4: W for No and S for Yes

For block 2:

Lists 1 and 2: W for No and S for Yes

Lists 3 and 4: W for Yes and S for No

We achieve this by creating another conditional statement that checks both which list we are using and which block we are in, and then assigns the matching instructions.

Click the Experiment_Instructions routine then click on the code component. In the Begin Experiment tab paste the following under your existing code:

    block_count = 1  # starts block at count 1 for the first block
    instruction_text = ""  # blank instruction string

Make sure to paste each piece of code into the correct tab: we want to create these variables before the routine starts, and then run code at the beginning and end of the routine. These lines should start at the left edge of the code window; if they are indented, your code won’t work.

Your final code in this section should look like this:

    list_select = expInfo['list_select']

    if list_select == "1":
        row_select = "0:100"
    elif list_select == "2":
        row_select = "101:200"
    elif list_select == "3":
        row_select = "201:300"
    elif list_select == "4":
        row_select = "301:400"

    block_count = 1  # starts block at count 1 for the first block
    instruction_text = ""  # blank instruction string

39 - The final code in the Experiment_Instructions component.
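If you prefer, the same list-to-rows logic can be written as a dictionary lookup that also stops with a clear message when list_select is not 1 to 4. This is a hypothetical alternative, not the method used in this guide: ROW_RANGES and choose_rows are names made up for illustration, the expInfo line is a stand-in for the dictionary PsychoPy builds from the start-up dialog, and the row ranges are copied unchanged from the code above. PsychoPy translates Python code components into JavaScript for Pavlovia, and constructs such as the f-string or raise may not translate cleanly, so the if/elif version is the safer choice for the online study.

```python
# Hypothetical alternative to the if/elif chain; ranges copied unchanged.
ROW_RANGES = {
    "1": "0:100",
    "2": "101:200",
    "3": "201:300",
    "4": "301:400",
}


def choose_rows(list_select):
    """Return the Selected rows string for a list number typed as text."""
    key = str(list_select).strip()  # dialog and URL values arrive as strings
    if key not in ROW_RANGES:
        raise ValueError(f"list_select should be 1, 2, 3 or 4, not {list_select!r}")
    return ROW_RANGES[key]


# Stand-in for the expInfo dictionary PsychoPy builds from the start-up dialog.
expInfo = {"participant": "test", "list_select": "3"}
row_select = choose_rows(expInfo["list_select"])
print(row_select)  # 201:300
```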

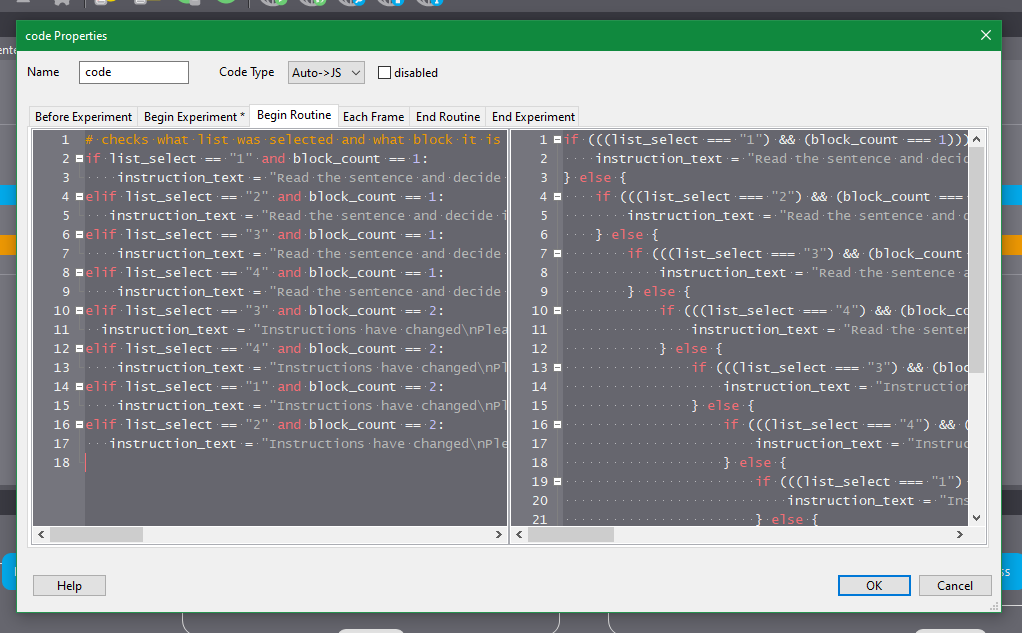

Next, we’ll allow the experiment to update the instructions for each block. Here, we ask the program to check which list of items was selected at the start of the experiment, and which block participants are in. We then set the instruction text to the relevant instructions for that participant. Note that in the text we use “\n” to create a new line.

In the Begin Routine tab of the code properties paste in the following code:

(Note: this code could easily be shortened by building the string from variables that swap in the relevant keys. Since this guide is for those new to Python, it is kept intentionally verbose.)

    # checks which list was selected and what block it is
    if list_select == "1" and block_count == 1:
        instruction_text = "Read the sentence and decide if it is sensible\nPress W for Yes\nPress S for No\n\nPress space bar to start the experiment and progress through the screens"
    elif list_select == "2" and block_count == 1:
        instruction_text = "Read the sentence and decide if it is sensible\nPress W for Yes\nPress S for No\n\nPress space bar to start the experiment and progress through the screens"
    elif list_select == "3" and block_count == 1:
        instruction_text = "Read the sentence and decide if it is sensible\nPress S for Yes\nPress W for No\n\nPress space bar to start the experiment and progress through the screens"
    elif list_select == "4" and block_count == 1:
        instruction_text = "Read the sentence and decide if it is sensible\nPress S for Yes\nPress W for No\n\nPress space bar to start the experiment and progress through the screens"
    elif list_select == "3" and block_count == 2:
        instruction_text = "Instructions have changed\nPlease read carefully\n\nRead the sentence and decide if it is sensible\nPress W for Yes\nPress S for No\n\nPress space bar to start the experiment and progress through the screens"
    elif list_select == "4" and block_count == 2:
        instruction_text = "Instructions have changed\nPlease read carefully\n\nRead the sentence and decide if it is sensible\nPress W for Yes\nPress S for No\n\nPress space bar to start the experiment and progress through the screens"
    elif list_select == "1" and block_count == 2:
        instruction_text = "Instructions have changed\nPlease read carefully\n\nRead the sentence and decide if it is sensible\nPress S for Yes\nPress W for No\n\nPress space bar to start the experiment and progress through the screens"
    elif list_select == "2" and block_count == 2:
        instruction_text = "Instructions have changed\nPlease read carefully\n\nRead the sentence and decide if it is sensible\nPress S for Yes\nPress W for No\n\nPress space bar to start the experiment and progress through the screens"

40 - Adding instructions to the Begin Routine tab of the code property.
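As the note above says, the Begin Routine code can be shortened by building the string from variables. A minimal sketch of that idea, using hypothetical names (YES_KEY, make_instructions): a dictionary records which key means Yes for each list and block, following the key table earlier in this section, and the text is assembled from it. It is meant for checking the logic locally; as with any custom code, test it online too, since the translation to JavaScript for Pavlovia may not support every Python feature.

```python
# Which key means "Yes" for each (list, block) pair, from the table above.
YES_KEY = {
    ("1", 1): "W", ("2", 1): "W", ("3", 1): "S", ("4", 1): "S",
    ("1", 2): "S", ("2", 2): "S", ("3", 2): "W", ("4", 2): "W",
}


def make_instructions(list_select, block_count):
    """Build the instruction text for one list (string) and block (int)."""
    yes_key = YES_KEY[(list_select, block_count)]
    no_key = "S" if yes_key == "W" else "W"  # the other key means "No"
    text = (
        "Read the sentence and decide if it is sensible\n"
        f"Press {yes_key} for Yes\nPress {no_key} for No\n\n"
        "Press space bar to start the experiment and progress through the screens"
    )
    if block_count == 2:
        # Warn participants that the keys have swapped
        text = "Instructions have changed\nPlease read carefully\n\n" + text
    return text


print(make_instructions("1", 1))
print(make_instructions("1", 2))
```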

Next, we need to make sure we have a variable that keeps track of the block number. This is needed so we can give participants the correct instructions for their list in the first block, and then change the instructions in the second block. We already created this variable, block_count, and set it to 1 in the Begin Experiment tab; now we need to increase its value by 1 each time the Experiment_Instructions routine ends, so the next block uses the next number.

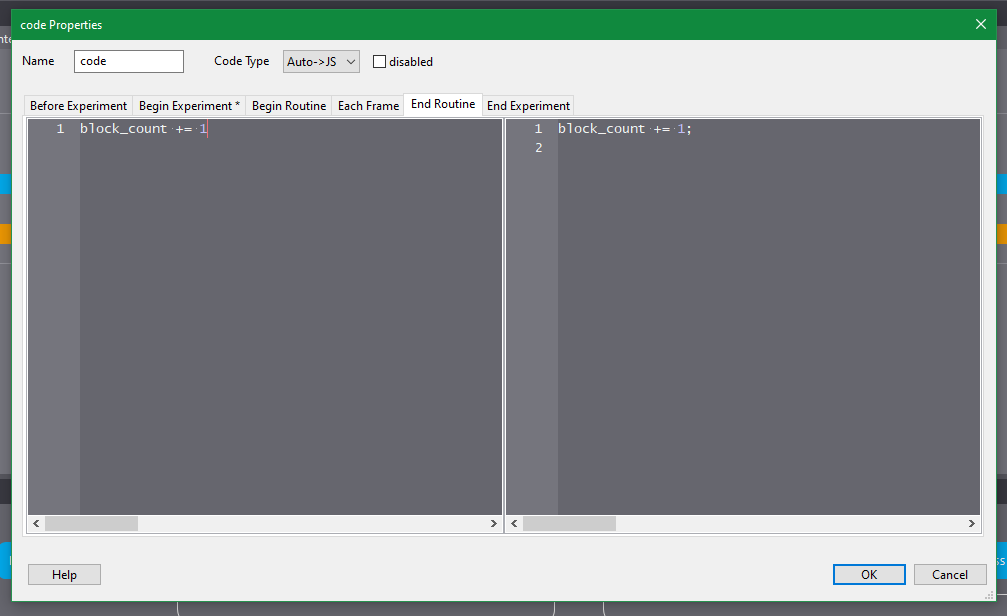

In the End Routine tab of the code property paste the following code:

block_count += 1

41 - Adding code that increments the block number by 1 at the end of each block.
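To see why block_count has the right value each time the instructions appear, here is a simplified model of the order in which the code tabs run, assuming the Experiment_Instructions routine sits inside a block loop that repeats twice. It is an illustration in plain Python, not code to paste into PsychoPy: the Begin Routine tab reads block_count when the instructions are chosen, and the End Routine tab increases it as the instruction screen ends, before that block’s trials.

```python
# Plain-Python model of the code tabs; not for pasting into PsychoPy.
block_count = 1  # Begin Experiment tab: runs once, before anything is shown
shown_with = []  # block_count value used by each instruction screen

for _ in range(2):  # assumed block loop with two repeats
    # Begin Routine tab: instructions are chosen using the current block_count
    shown_with.append(block_count)
    # End Routine tab: runs as the instruction screen ends
    block_count += 1
    # ... this block's trials would run here, before the next repeat ...

print(shown_with)  # [1, 2]
```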

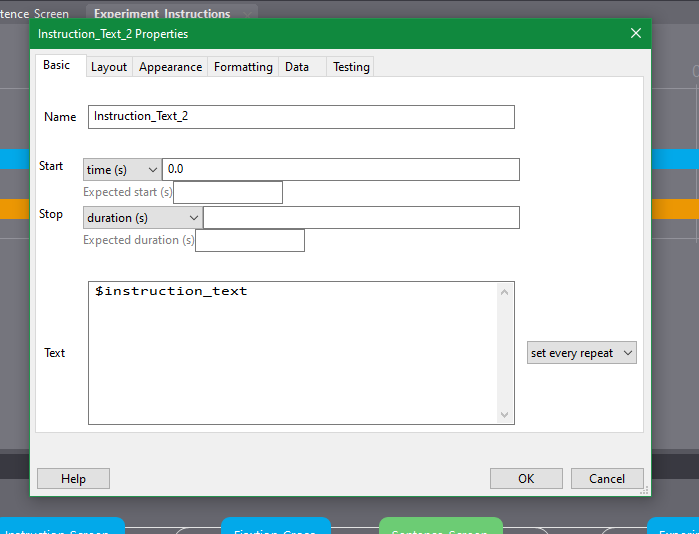

Finally, we will change the text in the Experimental section of our study. In the Experiment_Instructions routine click the text component. Change the text in the text block to the following code:

$instruction_text

The $ tells PsychoPy to treat the entry as a variable rather than as literal text. Next, in the dropdown menu to the right of the text box, change the selection from constant to set every repeat. This means that the experiment will update the instructions on every iteration of the block loop.

42 - Setting the experiment instructions text to dynamically update depending on list and block number.

Now run through the experiment to check that everything works as expected.

Once you have confirmed it is working, we will get the experiment to work online. To run a PsychoPy experiment online, we need a Pavlovia account, so go to https://pavlovia.org/ and create one. If you’re registered at a university with a site license (such as the University of Sunderland), register using your student email. Pavlovia will then automatically apply the university site license to your account, so you don’t need to pay for data collection.

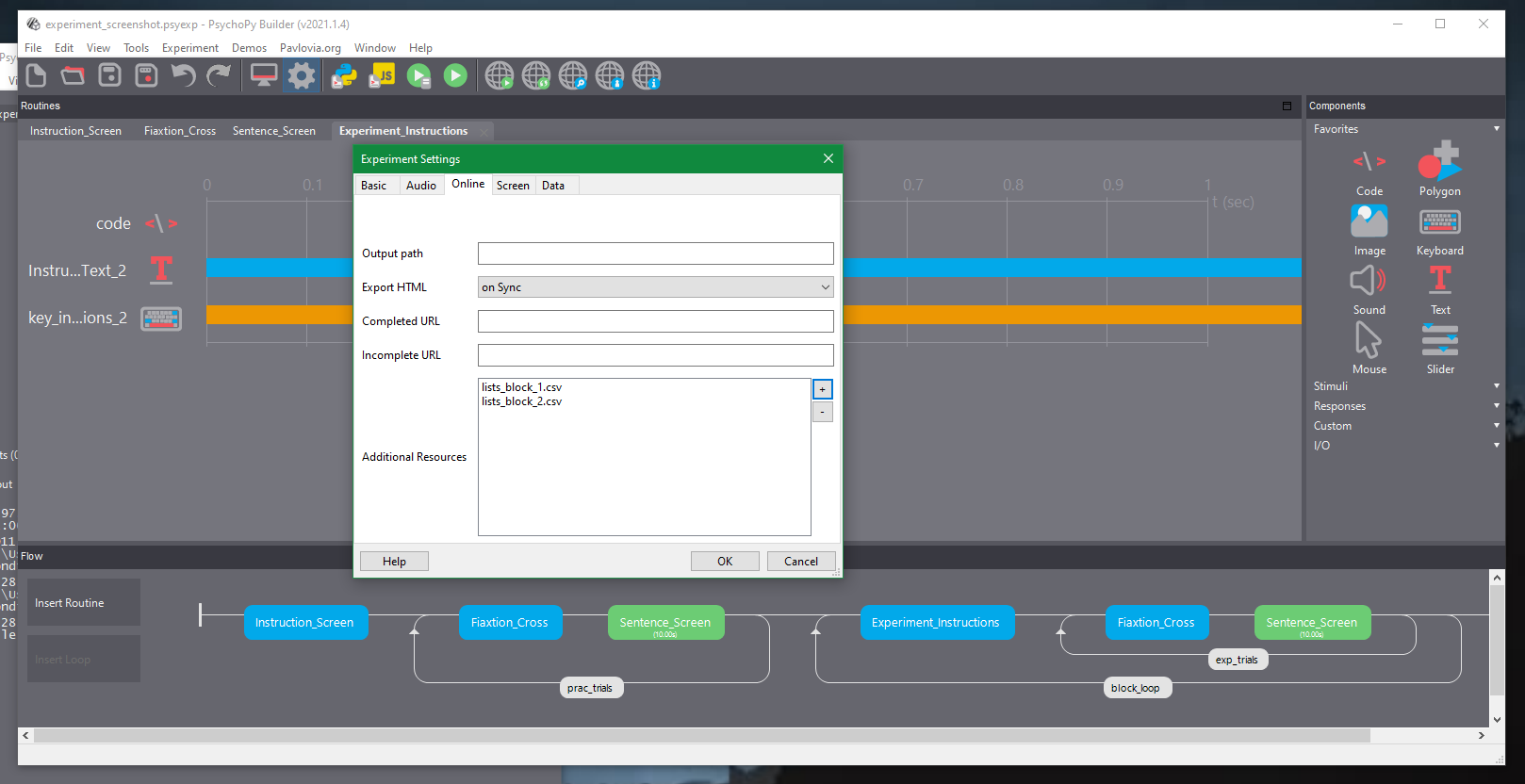

Before we can get our experiment working on the Pavlovia servers, we need to make sure PsychoPy knows to send all the conditions files to the servers. Files selected from within subfolders on your computer are sometimes not uploaded automatically, so we add them manually. Click the settings icon (the cog) at the top of the PsychoPy interface and go to the Online tab. Click the + symbol to the right of the Additional Resources box and select the files list_block_1.csv and list_block_2.csv. Click OK and save your work.

43 - Adding the conditions files to the additional resources before deploying the study online.

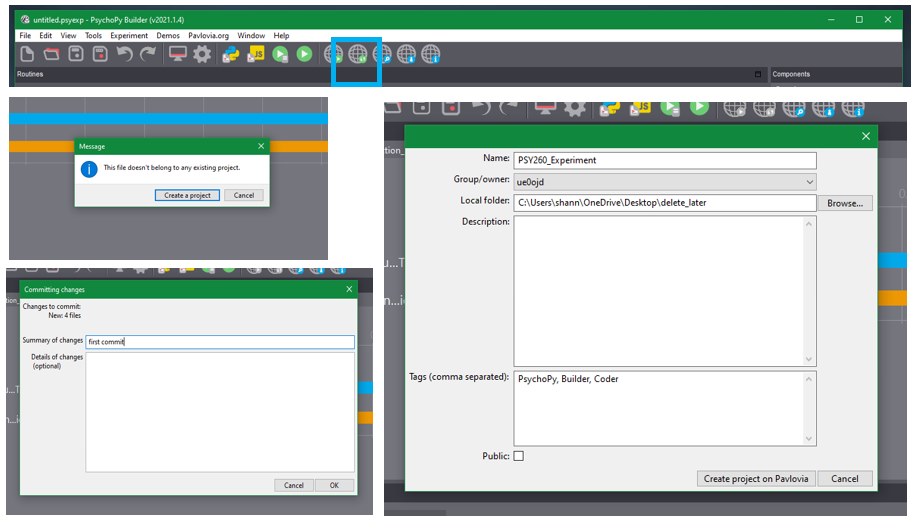

We are now ready to get the experiment working online. Click the internet symbol (webbed globe) that has the sync button next to it (the second icon in that group). A popup window will ask you to sign in to Pavlovia. Do so, and another window will state “This file doesn’t belong to any existing project.” Click the Create a project button.

Name the project PSY260_Experiment. Write a description for the project to help you remember what this is about. When you’re done, click Create project on Pavlovia.

A new window will ask you to Commit your changes for the study.

This is a concept from version control systems. Basically, you put your files in a repository that is synced online. When you make changes to your study or programming code, you save them and then commit them to the project. Once these changes are pushed online to the remote copy of the repository, your project is updated. Crucially, you will always have access to previous versions of your work. No more creating several versions of your work with obscure names!

Write a summary of your changes. In this case you could just make this “first commit”. Any further commits should describe what you’ve changed. Add details to any commits as and when you please to help you remember what changed.

44 - Syncing your study with Pavlovia. Creating a project, providing a description, and then committing your study.

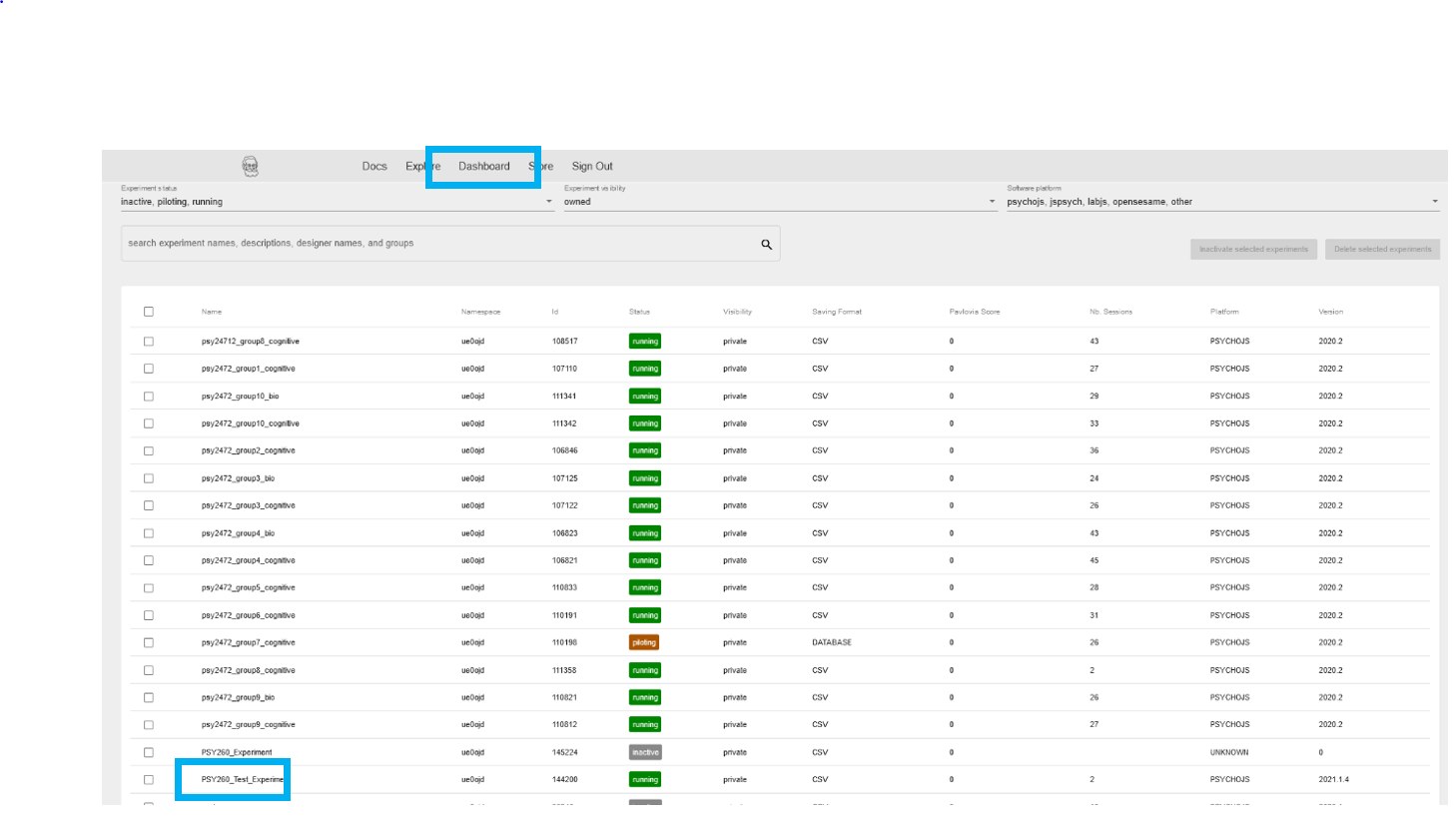

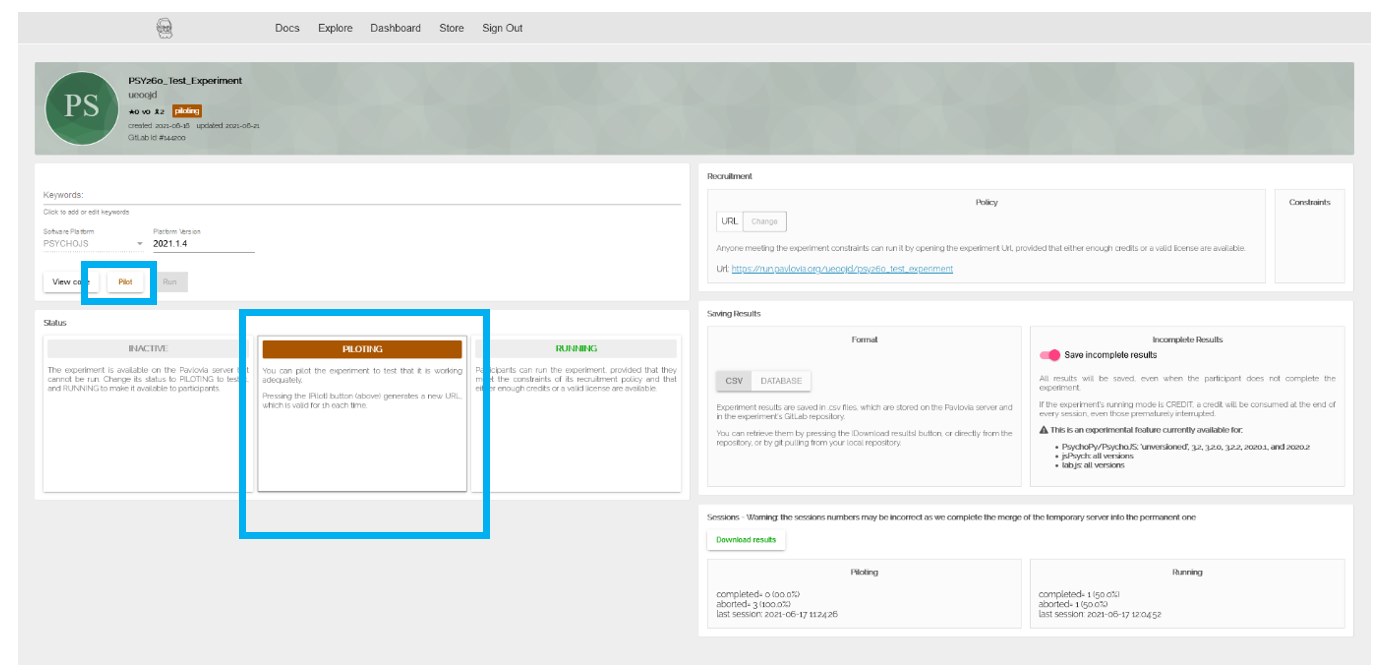

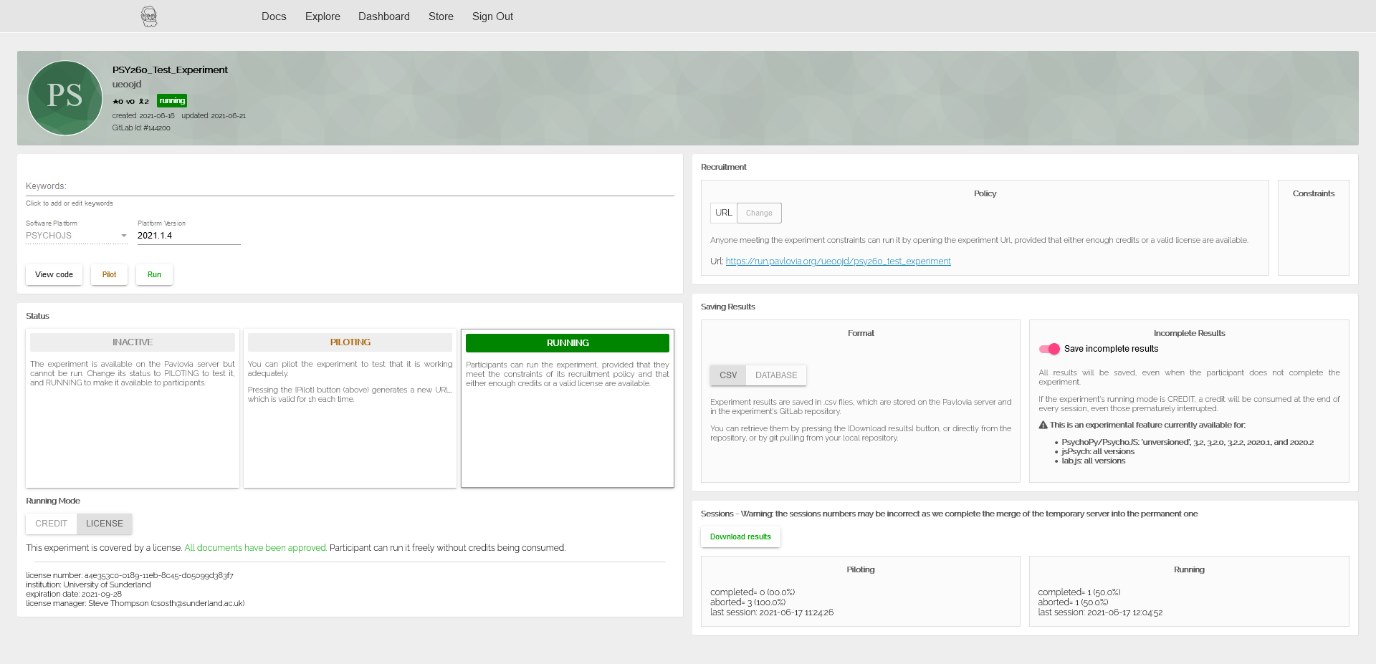

Now go to https://pavlovia.org/ and set the experiment to pilot. You can use this to check that your experiment runs in the way you expect. This is an important step before you release it for other people to take part.

To do this go to the Dashboard, then Experiments and select the experiment you have just created.

45 - Selecting your experiment in the Dashboard.

Once your study page loads, select the Piloting option for the study. This will make the study active for one hour at a specific link so you can test that it works properly.

Click the button that says Pilot to run your study in the browser and test it out. Make sure it behaves how you expect before you progress.

46 - Piloting your study.

If you need to change your study, do so and then sync the changes with Pavlovia again by clicking the web sync button and committing your changes.

Linking the Experiment Online with Qualtrics

Now that we have the experiment up and running on Pavlovia we need a way of showing participant information sheets, consent forms, debriefing sheets, and any other information we would need to collect that does not require experimental controls. This is easier to do within Qualtrics. We will start by creating a survey that will show the information sheet, consent form, assign a unique participant code, randomly assign participants to a list, and collect demographic information.

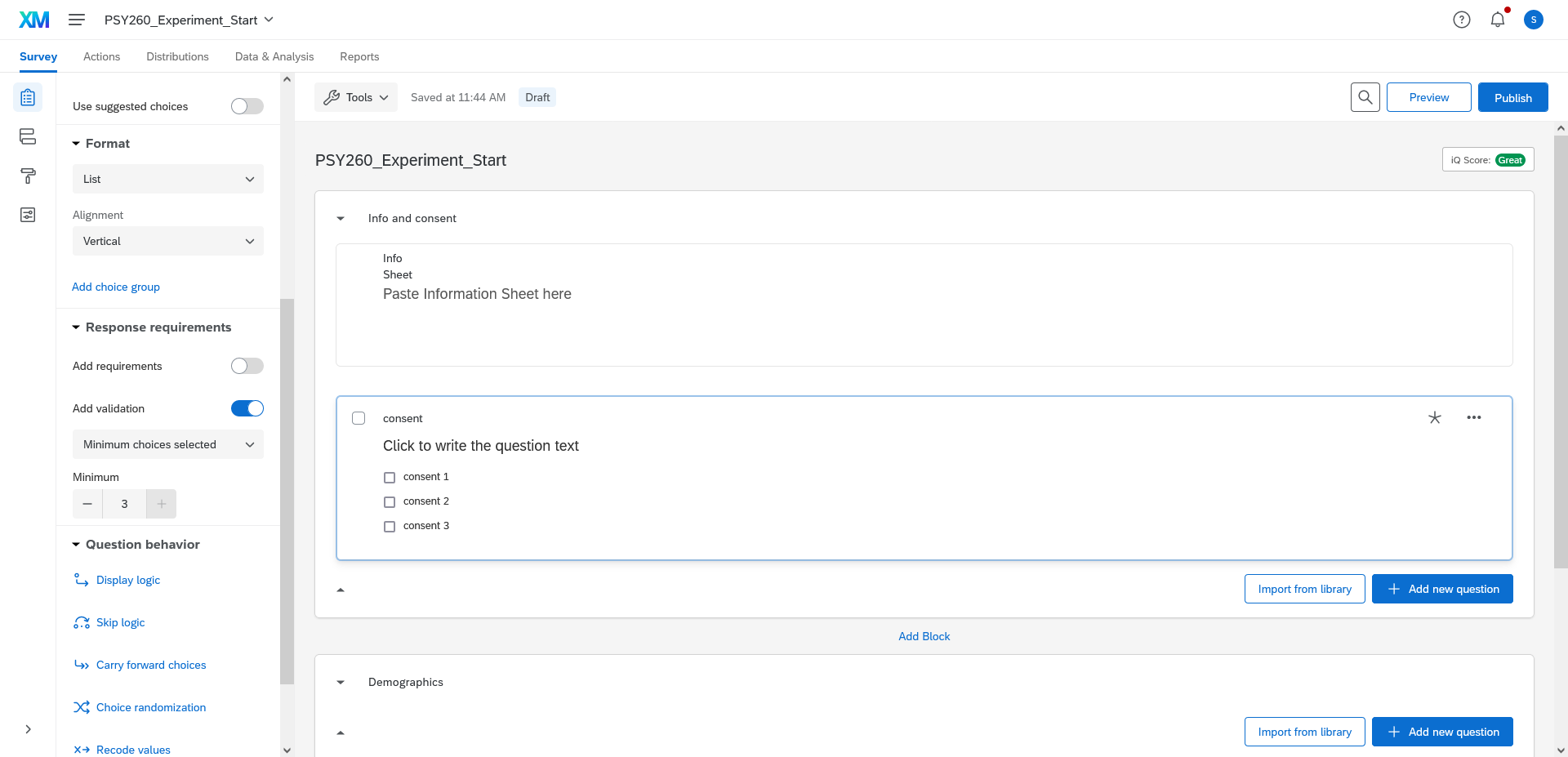

Go to Qualtrics. At the University of Sunderland we have a site license for this, which I use by navigating to https://sunduni.eu.qualtrics.com. Use your own institution’s Qualtrics domain, or create an account at https://www.qualtrics.com. Then create a new survey by selecting Survey under the From Scratch heading. Name it PSY260_Experiment_Start.

We will add 2 blocks of questions to the survey, one for the participant information sheet and consent form and the other to collect demographic questions.

For the information sheet choose Add Block, then Add New Question, and then make this Text/Graphic. Drag this to the top of the survey. This will be the landing page with the information about the study. Give the block a title (e.g. introduction), and change the question shorthand to info_sheet. In the text entry area just add some placeholder text for now.

The consent form can be adapted from the default question set. Change the block name to Consent. Change the question shorthand to consent. In the Format option in the sidebar to the left, change the format to multiple-choice and the Answer Type to Allow multiple answers. In the Response Requirements heading to the left side of the window switch on Add validation. Increase the minimum choices selected value below this switch to the number of multiple choices you’ve included. This ensures that participants can only progress with the study if they agree to all of the consent questions. If participants do not agree fully to the consent questions, they will not be able to take part.

47 - Adding consent questions with response requirements selected and 3 choices as the minimum selection.

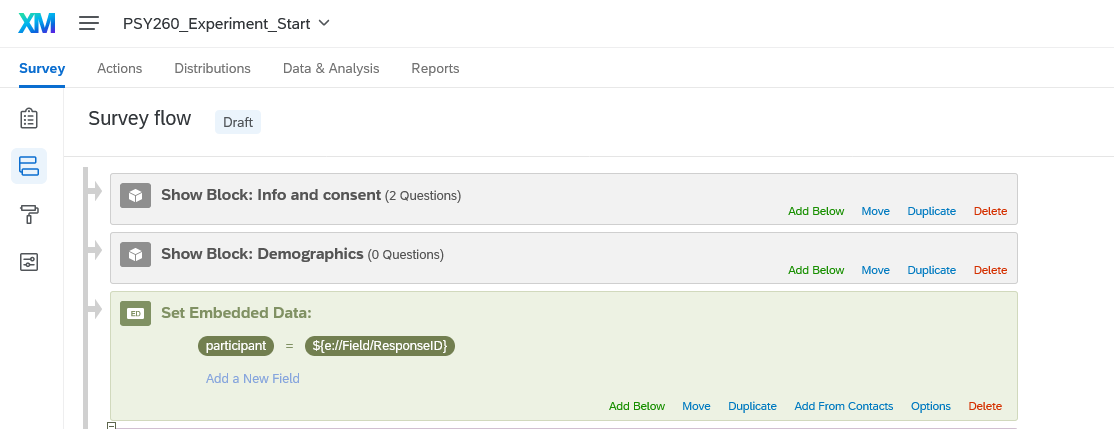

Now we need to make it so participants are assigned a unique participant ID for the study so we can distinguish each participant in the data. To do this we need to go into the survey flow by clicking the Survey Flow button to the far left of the screen (highlighted in blue in the image below).

We will add some embedded data to the survey so we can pass unique participant IDs to Pavlovia. Select Add a New Element Here and select Embedded Data. Type in the name participant, then click Set a Value Now and paste in the following code: ${e://Field/ResponseID}. This stores the unique Response ID that Qualtrics creates for each participant in a field called participant, which is the value that will be passed on to Pavlovia.

An alternative is to use the dropdown menu labelled Create a New Field and then Choose From Dropdown. After that choose Survey Metadata and then scroll down to find and select Response ID. This is a unique code that Qualtrics assigns to each participant.

48 - Adding embedded Response ID data to the survey.

Next, we are going to set up the randomisation of the list selection. This means participants will not pick a list when they take part; instead, one will be chosen randomly for them. We could do this in PsychoPy, but we do it in Qualtrics because Qualtrics keeps track of how many participants have already been assigned a list and completed the experiment. Qualtrics can then make sure that the lists are properly counterbalanced and that we don’t over-recruit for one list while under-recruiting for another.

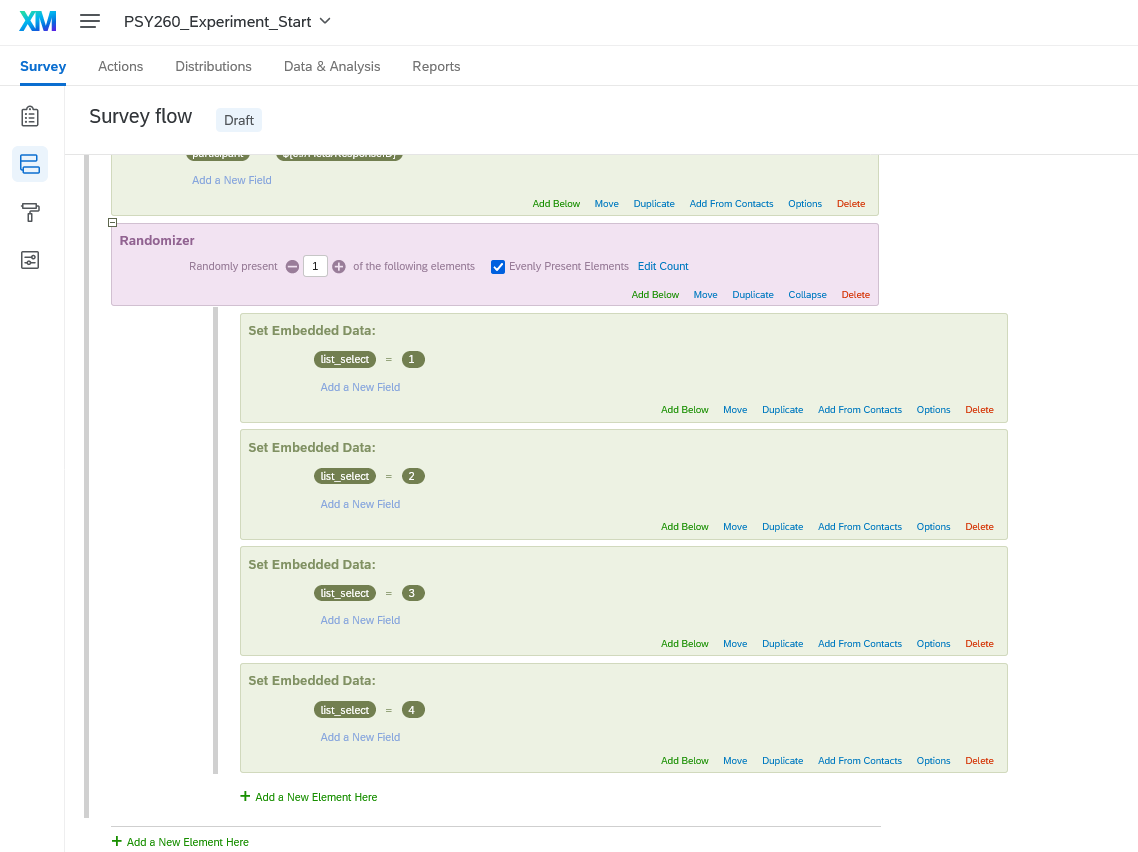

We set the randomisation in the survey flow. Select Add a New Element Here and choose Randomizer. Within the randomiser click Add a New Element Here and choose Embedded Data. Within the box labelled Create New Field or Choose From Dropdown type list_select. To the right of this click Set a value now and type in 1. This means the variable list_select will be given the value 1 if the list is chosen by the randomiser. Do the same three more times, but change the final value to 2, 3, and 4 respectively.

Finally, on the randomiser change the option from randomly present 4 of the following elements to 1. Then check the box labelled Evenly Present Elements. This ensures participants only receive 1 list, and the list assignment is evenly distributed across participants.

49 - Adding the randomiser and each list.
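The point of Evenly Present Elements is balanced list counts. A rough model of that aim (not Qualtrics’s actual implementation) is to give each new participant one of the lists that has been assigned least often so far. The sketch below, with a hypothetical function name assign_list, simulates 12 participants using a fixed random seed.

```python
import random
from collections import Counter

LISTS = ["1", "2", "3", "4"]


def assign_list(counts, rng):
    """Return one of the lists assigned least often so far (ties broken at random)."""
    fewest = min(counts[name] for name in LISTS)
    candidates = [name for name in LISTS if counts[name] == fewest]
    return rng.choice(candidates)


rng = random.Random(260)  # fixed seed so the run is repeatable
counts = Counter()  # missing keys count as 0
for _ in range(12):  # 12 simulated participants
    counts[assign_list(counts, rng)] += 1

print({name: counts[name] for name in LISTS})  # {'1': 3, '2': 3, '3': 3, '4': 3}
```

Whichever way the ties are broken, 12 simulated participants end up split three per list, which is the kind of balance the randomiser setting is meant to give you.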

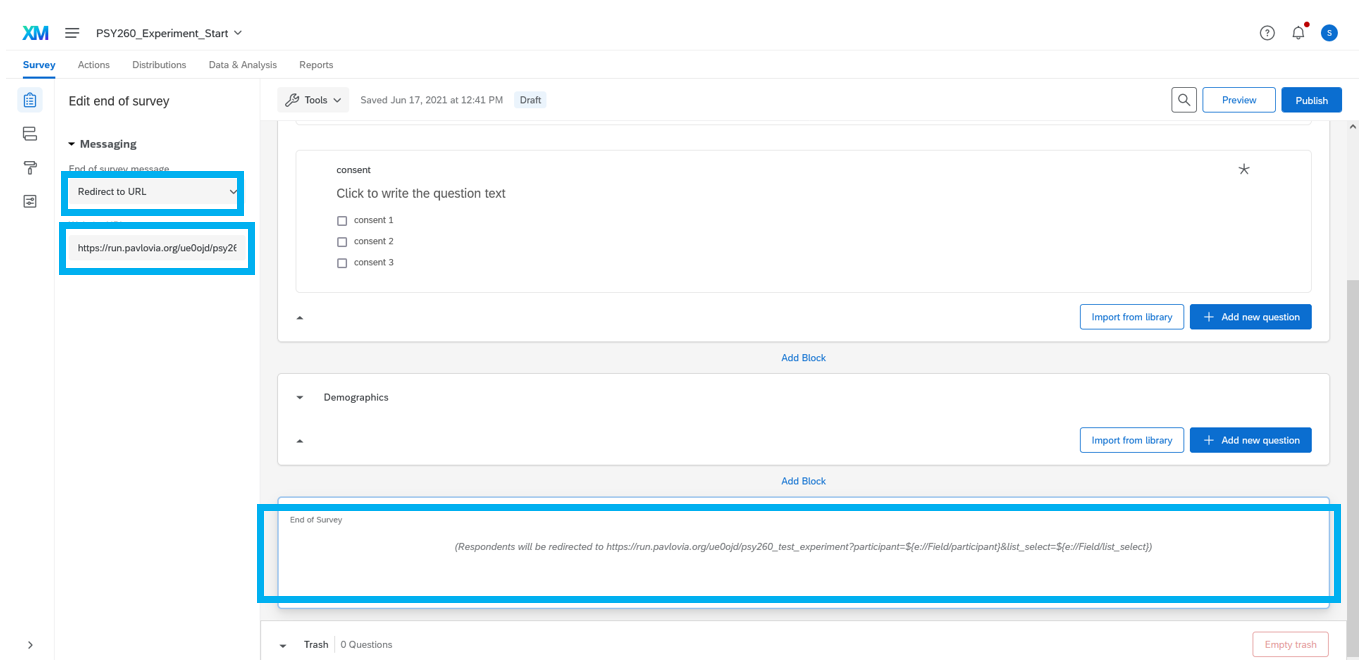

We have now set up all the data that needs to be carried over from Qualtrics to Pavlovia: the participant ID and their selected list. We now need to redirect participants at the end of the survey to our experiment on Pavlovia. We will do this using a Qualtrics URL redirect. To do this, we need to get the URL for our study from Pavlovia and copy it.

To do this, make sure you’re on your experiment page and change the status of the study from Piloting to Running. You will now see a URL for the study to the right. Copy this.

Go to your Qualtrics survey. In survey flow click Add a New Element Here, and choose End of Survey. Go back to the main (builder) view of the study. Click on the End of Survey element and in the sidebar change the Messaging to Redirect to URL. Paste your Pavlovia URL in the Website URL box. Then at the end of the URL paste the following:

?participant=${e://Field/participant}&list_select=${e://Field/list_select}

The ? starts a URL query string, and the name=value pairs after it (separated by &) let Qualtrics pass the participant ID and list on to Pavlovia. An example of the completed URL is shown below, but note that yours will be different: keep the query part (from the ? onwards) and replace the start with your own Pavlovia URL.

https://run.pavlovia.org/ue0ojd/psy260_test_experiment?participant=${e://Field/participant}&list_select=${e://Field/list_select}

50 - Adding your URL to the Qualtrics URL redirect.
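To see what the redirect produces, the sketch below builds the same kind of URL in Python with the standard library’s urlencode, using the example Pavlovia URL above and made-up values in place of what Qualtrics pipes into ${e://Field/participant} and ${e://Field/list_select}, and then reads the query back with parse_qs. The participant ID is hypothetical.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Example Pavlovia URL from this guide; replace with your own.
PAVLOVIA_URL = "https://run.pavlovia.org/ue0ojd/psy260_test_experiment"

# Hypothetical stand-ins for the values Qualtrics pipes into the URL.
embedded = {"participant": "R_example123", "list_select": "2"}

redirect = PAVLOVIA_URL + "?" + urlencode(embedded)
print(redirect)
# https://run.pavlovia.org/ue0ojd/psy260_test_experiment?participant=R_example123&list_select=2

# Reading the query back shows the name/value pairs the experiment receives.
query = parse_qs(urlsplit(redirect).query)
print(query)  # {'participant': ['R_example123'], 'list_select': ['2']}
```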

We will now preview the survey to make sure that it links to our experiment. In Qualtrics, click the Preview button.

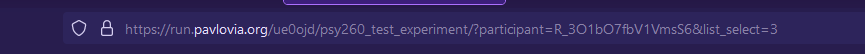

Check that the study works correctly and make any changes as and when needed. Make sure that the link in your browser also contains query values for participant ID and list_select. The participant ID may not be exactly as shown below depending on whether you created it in Qualtrics using the dropdown menu or by pasting our code.

51 - A preview of our URL with queries.

We have linked the information sheet and consent form in Qualtrics to our PsychoPy experiment. We now need to link the PsychoPy experiment back to Qualtrics for the debrief.

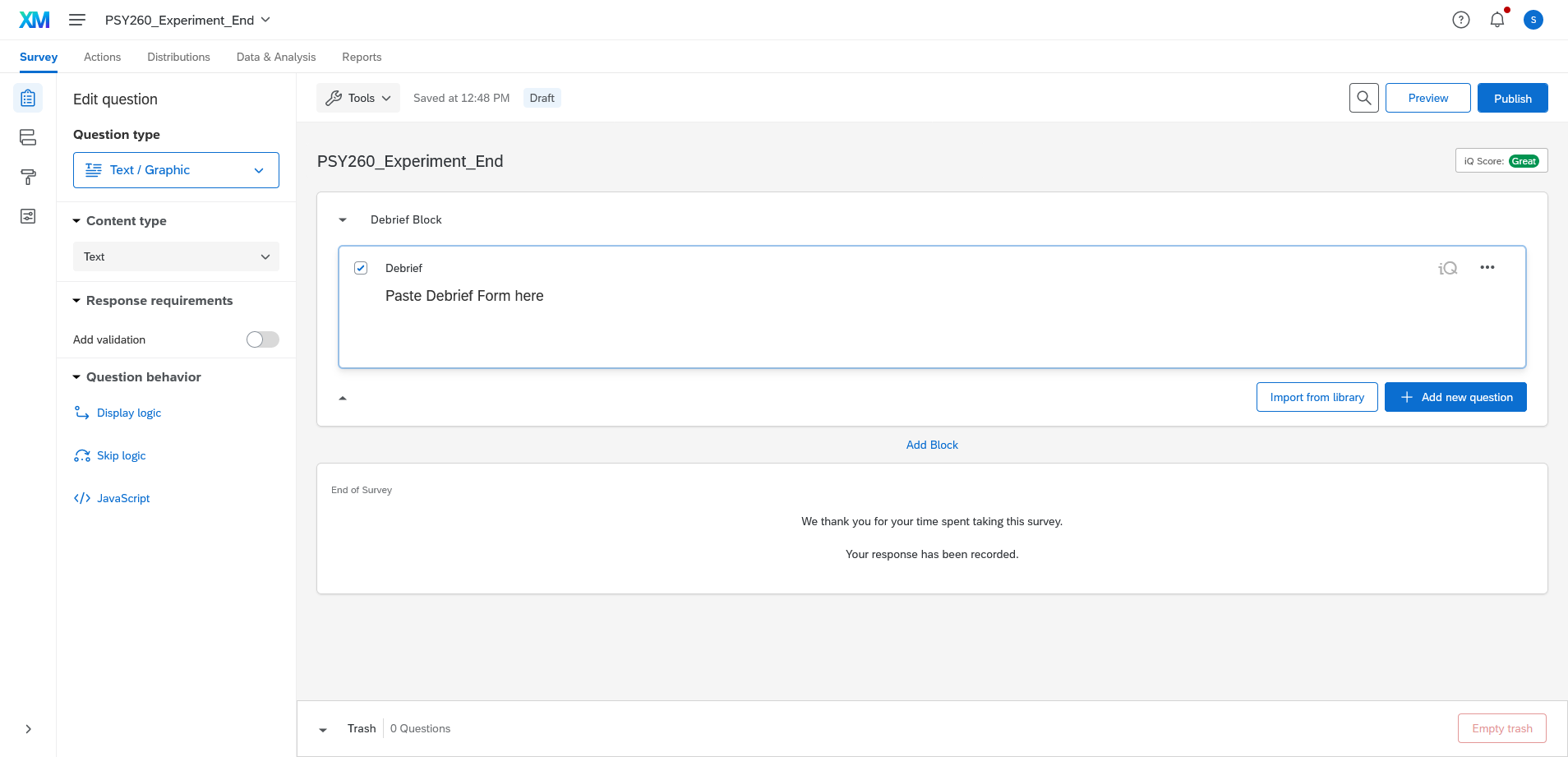

First we will need to create a new survey in Qualtrics called PSY260_Experiment_End. This will be where we store our debrief.

52 - Creating the debrief in Qualtrics.

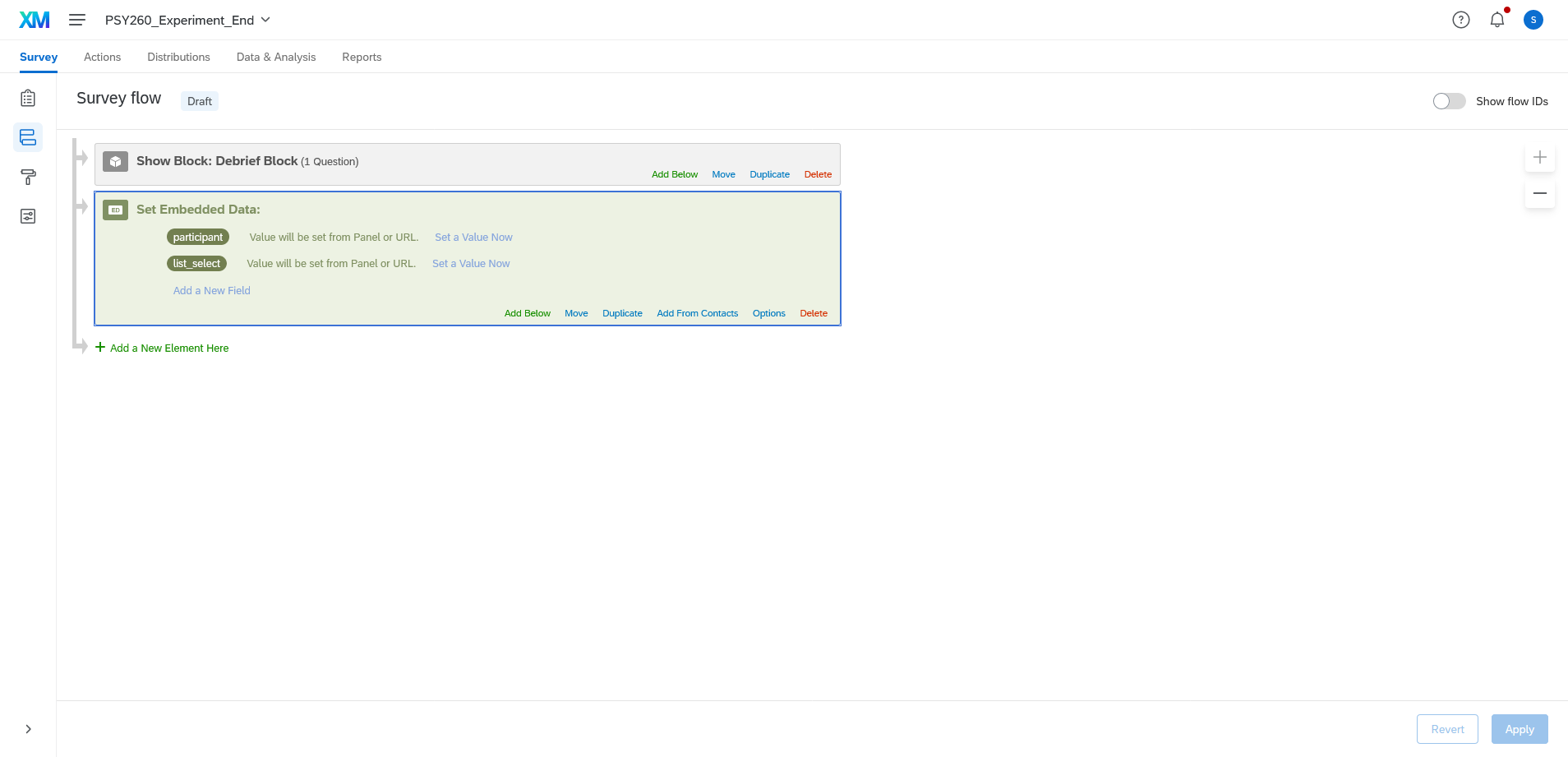

We want the debrief form to also collect the participant code and list_select variables. To do this, click on the survey flow and then click Add a New Element Here and select Embedded Data.

In the box labelled Create New Field or Choose From Dropdown type in participant (that’s your variable name for the participant IDs). Below this click Create New Field or Choose From Dropdown again and type in list_select (the list assigned to the participant). Don’t add any values here. Qualtrics will populate these variables from the URL that is provided.

53 - Adding the variable names to the debrief.

Now we need a way for the experiment on Pavlovia to link to Qualtrics. We will do this back in PsychoPy. First, we need the anonymous link for the debrief survey. To get it, publish the survey, or go to Distributions at the top of the page, select Web, and copy the anonymous link.

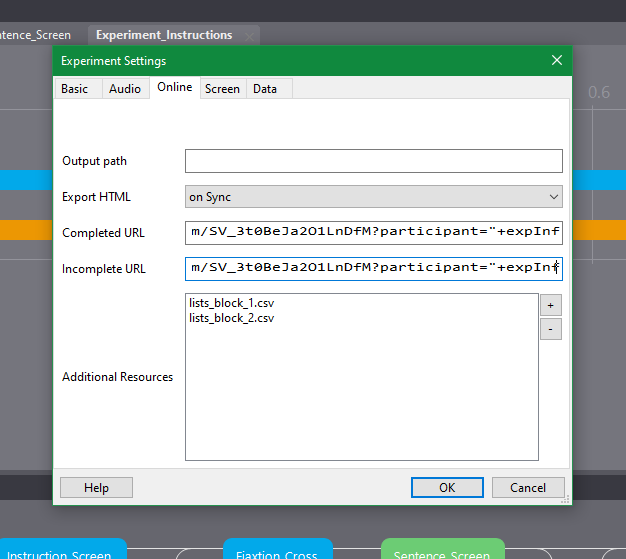

Then go back to your experiment in PsychoPy and add the URL redirect and resync the study to Pavlovia. Do this as follows:

Get the link to the debrief survey from Qualtrics (described above).

Go to your experiment in PsychoPy.

Click the settings icon (cog) and go to the Online tab in the popup window.

Paste the code below into both the Completed URL and Incomplete URL fields and replace the text in bold with your URL from Qualtrics.

Make sure your link looks like:

$"**YOURURL**?participant="+expInfo['participant']+"&list_select="+expInfo['list_select']

An example would look like this:

$"https://sunduni.eu.qualtrics.com/jfe/form/SV_3t0BeJa2O1LnDfM?participant="+expInfo['participant']+"&list_select="+expInfo['list_select']

54 - Adding the URL redirect from PsychoPy to Qualtrics.
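The leading $ tells PsychoPy to treat the Completed and Incomplete URL fields as code, so the expression simply joins strings. A quick local check of what it produces, using stand-in values: expInfo here is a hand-made dictionary and the Qualtrics address is a placeholder, not a real survey.

```python
# Stand-ins for values PsychoPy fills in at runtime (hypothetical examples).
expInfo = {"participant": "R_example123", "list_select": "2"}
YOURURL = "https://example.qualtrics.com/jfe/form/SV_example"  # placeholder link

# Same concatenation as in the URL fields, without the leading $.
debrief_url = YOURURL + "?participant=" + expInfo['participant'] + "&list_select=" + expInfo['list_select']
print(debrief_url)
# https://example.qualtrics.com/jfe/form/SV_example?participant=R_example123&list_select=2
```

Because the values are inserted as they are, this works for IDs made of letters, digits and underscores; a value containing characters such as & or spaces would need to be URL-encoded first.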

Save the study in PsychoPy. Then resync the study to Pavlovia by clicking the internet symbol (web globe) with the sync icon. Make your commit message clear, something like “Added URL redirect from PsychoPy to Qualtrics for the debrief”.

Now we should run through the study from the beginning to check that everything links up. We do this by previewing the PSY260_Experiment_Start survey.

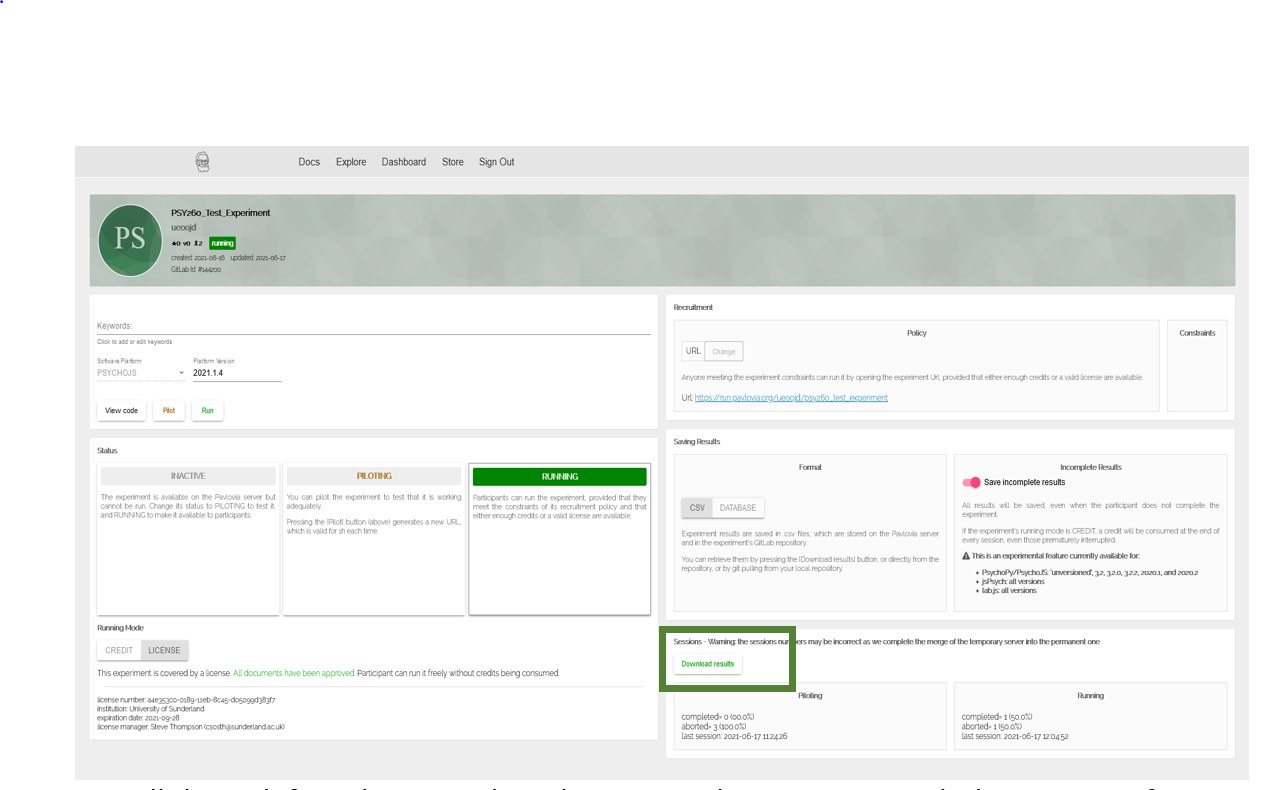

Once data is collected, you can download it by going back to the experiment on Pavlovia and clicking download results. This will download a zip folder containing a csv file for each person who took part. Before you view and clean the results, make sure you extract (unzip) the folder; working with the files while they are still zipped may cause problems with the data.

Check your data to make sure you have everything expected following the assignment of participants to lists. For example if you tested your study in list 1 are the items actually from list 1 in the data? Have you recorded reaction times?

55 - Downloading results from Pavlovia.
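Checking many files by hand gets tedious, so here is a minimal sketch of a script that summarises each participant’s csv file in the unzipped results folder: which list_select values appear and how many rows contain a response time. It assumes list_select is saved as a column (expInfo fields are usually written to PsychoPy data files) and that you know the name of your response time column, which depends on what you called your keyboard component; "key_resp.rt" and the demo file are hypothetical.

```python
import csv
import tempfile
from pathlib import Path


def summarise_results(folder, rt_column):
    """Summarise each participant csv in an unzipped Pavlovia results folder."""
    summary = {}
    for path in sorted(Path(folder).glob("*.csv")):
        # utf-8-sig also copes with a byte-order mark at the start of the file
        with path.open(newline="", encoding="utf-8-sig") as handle:
            rows = list(csv.DictReader(handle))
        # Distinct non-empty list numbers recorded in this file
        lists_seen = {(row.get("list_select") or "").strip() for row in rows}
        lists_seen.discard("")
        # Count rows whose response-time cell is not empty
        rows_with_rt = sum(1 for row in rows if (row.get(rt_column) or "").strip())
        summary[path.name] = {"list_select": sorted(lists_seen), "rows_with_rt": rows_with_rt}
    return summary


# Demo with one made-up file; point summarise_results at your own folder instead.
with tempfile.TemporaryDirectory() as tmp:
    demo_file = Path(tmp) / "demo_participant.csv"
    demo_file.write_text(
        "participant,list_select,key_resp.rt\nR_demo,1,0.85\nR_demo,1,\n",
        encoding="utf-8",
    )
    demo_summary = summarise_results(tmp, "key_resp.rt")

print(demo_summary)
# {'demo_participant.csv': {'list_select': ['1'], 'rows_with_rt': 1}}
```

If a file from a participant you tested on list 1 reports any other list number, or far fewer rows with response times than trials, that is a sign to revisit the list filtering or the keyboard component before real data collection.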

Once you have confirmed your data is being collected properly, you are ready to start collecting the data from real participants. First, delete any test data from Pavlovia and Qualtrics, and then share your link with others to take part!

Make sure you have ethical approval to run the study before performing data collection in full.

Good luck!